von Neumann bottleneck

What is the von Neumann bottleneck?

The von Neumann bottleneck is a limitation on throughput caused by the standard personal computer architecture. The term is named for John von Neumann, who developed the theory behind the architecture of modern computers. Earlier computers were fed programs and data for processing while they were running.

In 1945, von Neumann proposed a computer design that was based on the concept of the stored program computer, in which program instructions and data are held in memory. Known as the von Neumann architecture -- or sometimes the Princeton architecture -- this model became the standard used for many of the computers to follow and continues to be used for a large number of today's systems.

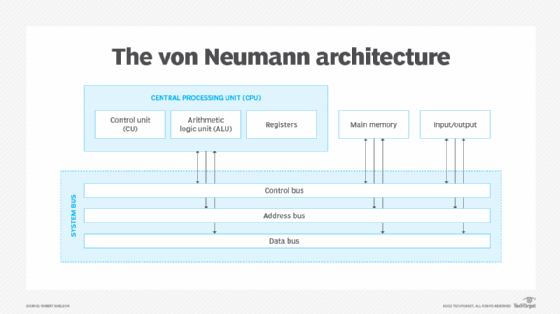

In the von Neumann architecture, a computer's main components include the central processing unit (CPU), the memory unit, and the input and output devices. The CPU contains the control unit, arithmetic logic unit and registers. The processor and memory are separate components, with data moving between them via the system bus. The memory unit, often referred to as main memory or primary memory, stores both the program instructions and data.
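The stored-program organization described above can be sketched as a toy machine. This is only an illustration: the `Memory` class, the `run` function and the four-instruction set (`LOAD`, `ADD`, `STORE`, `HALT`) are hypothetical and do not come from any real processor. The sketch keeps instructions and data in one memory and counts every memory access as one transfer over the bus.

```python
# Toy stored-program machine (hypothetical instruction set, for illustration only).
# Instructions and data live in the same memory, and every read or write
# of that memory is counted as one bus transfer.

class Memory:
    def __init__(self, cells):
        self.cells = list(cells)
        self.bus_transfers = 0

    def read(self, addr):
        self.bus_transfers += 1
        return self.cells[addr]

    def write(self, addr, value):
        self.bus_transfers += 1
        self.cells[addr] = value


def run(memory):
    """Fetch-decode-execute loop with a program counter and one accumulator."""
    pc = 0
    acc = 0
    while True:
        opcode, operand = memory.read(pc)  # instruction fetch crosses the bus
        pc += 1
        if opcode == "LOAD":
            acc = memory.read(operand)     # data fetch crosses the same bus
        elif opcode == "ADD":
            acc += memory.read(operand)
        elif opcode == "STORE":
            memory.write(operand, acc)
        elif opcode == "HALT":
            return acc
        else:
            raise ValueError(f"unknown opcode: {opcode}")


# Addresses 0-3 hold the program; addresses 4-6 hold the data.
program_and_data = [
    ("LOAD", 4),
    ("ADD", 5),
    ("STORE", 6),
    ("HALT", 0),
    2,
    3,
    0,
]

memory = Memory(program_and_data)
result = run(memory)
print(result, memory.cells[6], memory.bus_transfers)  # prints: 5 5 7
```

Adding two numbers takes seven transfers here: four instruction fetches and three data accesses, all over the same path.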

The system bus carries all data moving between the components of the von Neumann architecture. This shared path has become an increasingly severe bottleneck as workloads have changed and data sets have grown larger. Over the years, computer components have evolved to try to meet the needs of these changing workloads. For example, processors are significantly faster, and memory supports greater densities, making it possible to store more data in less space.

In contrast to these improvements, transfer rates between the CPU and memory have made only modest gains. As a result, the processor spends more of its time sitting idle, waiting for data to be fetched from memory. No matter how fast a given processor can work, it is limited by the rate of transfer allowed by the system bus. A faster processor often just finishes its work sooner and then spends a larger share of its time waiting.
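The arithmetic behind this limit can be sketched with a simple roofline-style bound. The article does not name this model, and it is used here only for illustration. The function name, the 50 GB/s bandwidth and the 0.25 operations-per-byte figure are assumptions, not measurements of any real system.

```python
def attainable_gflops(peak_gflops, bandwidth_gb_s, flops_per_byte):
    """Upper bound on sustained throughput.

    The processor can run no faster than its own peak rate, and no faster
    than the rate at which memory delivers the operands it needs.
    """
    return min(peak_gflops, bandwidth_gb_s * flops_per_byte)


# Assumed figures: memory delivers 50 GB/s, and the workload performs
# one operation for every 4 bytes it moves (0.25 operations per byte).
bandwidth_gb_s = 50.0
flops_per_byte = 0.25

for peak in (10.0, 100.0, 1000.0):
    rate = attainable_gflops(peak, bandwidth_gb_s, flops_per_byte)
    busy = rate / peak
    print(f"peak {peak:7.1f} GFLOP/s -> attainable {rate:5.1f} GFLOP/s, busy fraction {busy:.4f}")
```

Under these assumptions, raising the peak rate from 100 to 1,000 GFLOP/s leaves attainable throughput unchanged at 12.5 GFLOP/s. Only the fraction of time the processor is busy goes down.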

Overcoming the von Neumann bottleneck

The von Neumann bottleneck has often been considered a problem that can be overcome only through significant changes to computer or processor architectures. Even so, there have been numerous attempts to address the limitations of the existing structure:

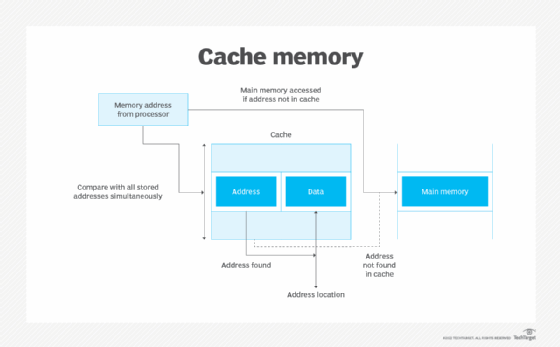

- Caching. A common method for addressing the bottleneck has been to add caches to the CPU. In a typical cache configuration, the L1, L2 and L3 cache levels sit between the processor core and the main memory to help speed up operations. The L1 cache is the smallest, fastest and most expensive. The L3 cache, which is shared among multiple processor cores, is the largest, slowest and least expensive. The L2 cache falls somewhere between the two.

- Prefetching. Instructions and data that are expected to be used first are fetched into the cache in advance so they're immediately available when needed.

- Speculative execution. The processor executes instructions before it knows whether their results will be needed, so the results are ready if they are. Speculative execution relies on branch prediction to guess which path a program is most likely to take, and the speculative work is discarded when the guess turns out to be wrong.

- Multithreading. The processor keeps multiple threads in flight and switches execution among them, so work on one thread can proceed while another waits on memory. The switching usually happens so quickly that the threads appear to be running simultaneously.

- New types of RAM. Current developments in RAM technologies promise to help address at least part of the bottleneck by getting data onto the bus more quickly. Emerging areas of development include resistive RAM, magnetic RAM, ferroelectric RAM and spin-transfer torque RAM.

- Near-data processing (NDP). Memory and storage are augmented with processing capabilities that help improve performance, while reducing dependency on the system bus. One type of NDP is processing in memory, which integrates a processor and memory in a single microchip.

- Hardware acceleration. Processing is shifted to other hardware devices to reduce the load on the CPU and dependency on the system bus. Common types of hardware acceleration include GPUs, application-specific integrated circuits and field-programmable gate arrays.

- System-on-a-chip (SoC). A single chip contains processing, memory and other system resources, eliminating much of the data transfer on the system bus. Mobile devices and embedded systems use SoC technology extensively, and it is now making its way into personal computers, with Apple silicon leading the way.
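The effect of caching from the list above can be illustrated with a small simulation. This is a minimal sketch under stated assumptions: the `LineCache` class is hypothetical, and it models a single fully associative cache with least-recently-used replacement, which is simpler than the multilevel, set-associative caches in real processors. It compares two access orders over the same 4,096 words.

```python
from collections import OrderedDict


class LineCache:
    """Hypothetical fully associative cache of fixed-size lines, LRU replacement."""

    def __init__(self, num_lines, line_size):
        self.num_lines = num_lines
        self.line_size = line_size
        self.lines = OrderedDict()  # line tag -> True, oldest entry first
        self.hits = 0
        self.misses = 0

    def access(self, addr):
        tag = addr // self.line_size
        if tag in self.lines:
            self.hits += 1
            self.lines.move_to_end(tag)         # mark as most recently used
        else:
            self.misses += 1                    # whole line is fetched from memory
            self.lines[tag] = True
            if len(self.lines) > self.num_lines:
                self.lines.popitem(last=False)  # evict the least recently used line


def hit_rate(addresses, num_lines=64, line_size=8):
    cache = LineCache(num_lines, line_size)
    for addr in addresses:
        cache.access(addr)
    return cache.hits / (cache.hits + cache.misses)


WORDS_PER_LINE = 8
TOTAL_LINES = 512  # 4,096 words in total, far more than the cache holds

# Walk memory in address order: each fetched line serves the next 7 accesses.
sequential = [line * WORDS_PER_LINE + word
              for line in range(TOTAL_LINES)
              for word in range(WORDS_PER_LINE)]

# Touch one word per line, then loop back: each line is evicted before reuse.
strided = [line * WORDS_PER_LINE + word
           for word in range(WORDS_PER_LINE)
           for line in range(TOTAL_LINES)]

print(hit_rate(sequential))  # 0.875
print(hit_rate(strided))     # 0.0
```

Both orders touch exactly the same words. In the sequential walk, seven of every eight accesses are served from the cache. In the strided walk, every access goes back to main memory.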

See also: instruction, input/output (I/O), read-only memory (ROM), neuromorphic chip, singularity (the), neuromorphic computing.