cache thrash

What is cache thrash?

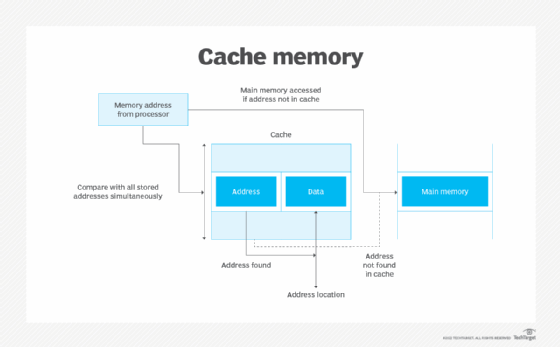

Cache thrash is a condition in which an ongoing computer activity fails to make progress because of excessive resource use or conflicts in the caching system. It prevents client processes from benefiting from the cache and can evict useful data before it is reused. Cache thrashing is a common problem in parallel processing architectures, where each central processing unit (CPU) has its own local cache.

When a CPU cache is constantly refilled with new data, the result is frequent cache misses and evictions. Likewise, when the CPU repeatedly accesses a set of data that is larger than the cache, data is pushed out before it can be reused, even though the program still needs it. This is known as cache thrashing. It forces the CPU to access main memory -- which is slower than the cache -- more often, which reduces the processor's performance and efficiency.
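The effect of a data set that is slightly larger than the cache can be seen in a small simulation. The sketch below is illustrative only: it assumes a fully associative cache with a least recently used (LRU) replacement policy, and the names `lru_hit_rate`, `CACHE_LINES` and `PASSES` are hypothetical. Real CPU caches are set-associative and often use approximations of LRU, so actual hit rates will differ.

```python
from collections import OrderedDict


def lru_hit_rate(accesses, capacity):
    """Return the fraction of accesses that hit in an LRU cache of `capacity` lines."""
    cache = OrderedDict()  # keys are line numbers, ordered oldest -> newest
    hits = 0
    for line in accesses:
        if line in cache:
            hits += 1
            cache.move_to_end(line)  # mark as most recently used
        else:
            if len(cache) >= capacity:
                cache.popitem(last=False)  # evict the least recently used line
            cache[line] = True
    return hits / len(accesses)


CACHE_LINES = 8  # hypothetical cache capacity, in lines
PASSES = 100     # number of sweeps over the data

fits = list(range(CACHE_LINES)) * PASSES         # data set equals the cache size
too_big = list(range(CACHE_LINES + 1)) * PASSES  # data set is one line larger

print(f"fits in cache:    {lru_hit_rate(fits, CACHE_LINES):.2%}")     # 99.00%
print(f"one line too big: {lru_hit_rate(too_big, CACHE_LINES):.2%}")  # 0.00%
```

Under these assumptions, adding a single line to the data set drops the hit rate from 99% to zero, because each line is evicted just before the sweep returns to it.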

There are many reasons why cache thrashing can happen. One is a cache that is too small for the workload. High contention, poor locality of reference and suboptimal cache replacement policies can also cause it.

What happens during cache thrashing?

During cache thrashing, the computer typically repeats the same actions -- loading data into the cache, evicting it and loading it again -- in an attempt to complete the desired task. A key sign of cache thrashing is high CPU usage or a system that seems to be running very slowly.

False sharing in the CPU cache line mechanism is another cause of cache thrashing. A cache line is the fixed-size block of bytes that the CPU transfers between cache levels or between the cache and main memory. False sharing happens when multiple CPUs or CPU cores work on different variables that happen to be stored on the same cache line. Although no data is actually shared, each write by one CPU invalidates the copy of the line held by the others, which must then reload it. Multiple occurrences of false sharing can affect system performance or cause it to stall.
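As a rough illustration of false sharing, the following sketch counts invalidations in a toy write-invalidate model with two cores. The 64-byte line size is an assumption (a common value, not a universal one), and `count_invalidations` is a hypothetical helper, not a model of any specific coherence protocol.

```python
LINE_SIZE = 64  # bytes per cache line; assumed for illustration


def count_invalidations(addr_a, addr_b, rounds, line_size=LINE_SIZE):
    """Core 0 writes addr_a and core 1 writes addr_b, alternating for `rounds` rounds.

    Toy write-invalidate model: a write leaves the writer with the only valid
    copy of that line, invalidating the other core's copy if it holds one.
    """
    line_of = {0: addr_a // line_size, 1: addr_b // line_size}
    valid = {0: set(), 1: set()}  # lines each core currently holds
    invalidations = 0
    for _ in range(rounds):
        for core in (0, 1):
            other = 1 - core
            line = line_of[core]
            if line in valid[other]:
                valid[other].discard(line)
                invalidations += 1
            valid[core].add(line)
    return invalidations


# Two 8-byte counters packed side by side land on the same line.
print(count_invalidations(0, 8, rounds=1000))   # 1999
# Padding the second counter out to the next line removes the conflict.
print(count_invalidations(0, 64, rounds=1000))  # 0
```

In this model, moving the second variable to its own cache line is enough to eliminate every invalidation, which is why padding or aligning per-thread data to the line size is a common remedy.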

When cache thrashing occurs and the total resources available are insufficient, one CPU process diverts resources from another process, which in turn must take resources from another process in a vicious cycle. For example, a translation lookaside buffer (TLB) might have to be completely reloaded on each sweep through the data if it cannot hold entries for all the pages involved. This means there is no reuse of TLB entries, leading to inefficiency and long load times. In this case, thrashing can occur even if there are no page faults.
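The TLB example above comes down to simple arithmetic: the amount of memory a TLB can map at once is its entry count multiplied by the page size. The sketch below assumes a fully associative TLB with LRU replacement, one page per entry and a page-aligned buffer; the function names and the figures of 64 entries and 4 KiB pages are illustrative assumptions, not values from any particular CPU.

```python
def tlb_reach_bytes(entries, page_size):
    """Memory the TLB can map at once, assuming one page per entry."""
    return entries * page_size


def sweep_reuses_tlb(buffer_bytes, entries, page_size):
    """True if repeated sequential sweeps over a page-aligned buffer can reuse TLB entries.

    With LRU replacement, a sweep that touches more pages than the TLB has
    entries evicts every translation before the next sweep needs it again.
    """
    pages_touched = -(-buffer_bytes // page_size)  # ceiling division
    return pages_touched <= entries


ENTRIES = 64      # assumed TLB size
PAGE = 4 * 1024   # assumed 4 KiB pages

print(tlb_reach_bytes(ENTRIES, PAGE))                # 262144 bytes (256 KiB)
print(sweep_reuses_tlb(256 * 1024, ENTRIES, PAGE))   # True: 64 pages fit
print(sweep_reuses_tlb(1024 * 1024, ENTRIES, PAGE))  # False: 256 pages do not
```

All of the buffer's pages can be resident in RAM in both cases, which is why this form of thrashing shows up without any page faults.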

Cache thrashing and context switching

Context switching is the procedure of changing from one task to another to enable multitasking without conflicts. Cache thrash can sometimes be related to context switching, because each newly scheduled task loads its own data into the cache and can evict the data of the task that ran before it. The underlying cause might be that thread pools are not sized properly in servlet containers, or the algorithms used might be a poor match for the type of data being accessed.

Cache pollution is another common cache efficiency problem. It occurs when the CPU loads a lot of data that won't be needed again. This causes the cache to push out data that is likely to be needed soon to make room for data that isn't, again affecting CPU speed and performance.

How to avoid cache thrashing

Methods such as cache coherency protocols -- directory-based or snooping-based -- that permit multiple caches to hold copies of the same data item can limit thrashing in some use cases. Cache coherency, also called cache coherence, is particularly important for multicore or multiprocessor systems where several CPUs share the same memory but each has its own cache. It prevents data inconsistencies by ensuring all copies of a data item in different caches remain up to date, which preserves the system's reliability and helps maintain its performance. In such systems, cache coherence also ensures the CPU accesses the most up-to-date version of the data, regardless of whether it resides in RAM or in another CPU's cache.

Another way to avoid cache thrashing is to improve spatial and temporal locality, which can be done by optimizing the code and its data structures. Using different cache levels to reduce cache contention and choosing a cache replacement policy that matches the workload are two other ways to minimize occurrences of cache thrashing. Yet another is to fit the work to the cache size -- for example, by first determining the cache size and then splitting large amounts of data into smaller blocks that fit inside the cache.
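Splitting data into cache-sized blocks, often called blocking or tiling, might look like the sketch below. It shows the loop structure only: the 32 KiB cache size, the 8-byte item size and the choice to use half the cache are assumptions, and the helper names are hypothetical. Python lists hold references to objects rather than contiguous values, so the real memory footprint differs; the same pattern applies more directly to arrays in a compiled language.

```python
def blocks_that_fit(data, cache_bytes, item_bytes, fraction=0.5):
    """Yield consecutive slices of `data` sized to occupy `fraction` of the cache."""
    items_per_block = max(1, int(cache_bytes * fraction) // item_bytes)
    for start in range(0, len(data), items_per_block):
        yield data[start:start + items_per_block]


def sum_and_sum_of_squares(data, cache_bytes=32 * 1024, item_bytes=8):
    """Make two passes per block so the second pass revisits recently loaded data."""
    total = 0
    total_sq = 0
    for block in blocks_that_fit(data, cache_bytes, item_bytes):
        total += sum(block)                    # pass 1 loads the block
        total_sq += sum(x * x for x in block)  # pass 2 reuses it while still cached
    return total, total_sq


values = list(range(5000))
print(sum_and_sum_of_squares(values))  # same result as two full passes over `values`
```

Running both passes on one block before moving to the next keeps the working set of each step below the assumed cache size, instead of sweeping the whole data set twice.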

Some other ways to prevent cache thrashing include the following:

- Cache coloring. Also known as page coloring, cache coloring involves getting the operating system to determine which bits of a physical address select the set in which a cache line is placed, and then allocating physical pages so that these bits match the corresponding bits of the page's virtual address.

- Flushing cache lines. Flushing frees entries in the cache for more important data and prevents unnecessary caching, such as caching data that's unlikely to be used again soon.

- Prefetching data. Programmers can request that data be prefetched -- loaded into the cache before it is needed -- to avoid cache misses. Some CPUs can also automatically prefetch cache lines based on certain data access patterns.
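For the cache coloring item in the list above, the color of a page can be computed from its physical address. This is a minimal sketch that assumes a physically indexed, set-associative cache whose size, associativity and page size are powers of two; the function names and the example figures (2 MiB, 16 ways, 4 KiB pages) are illustrative assumptions.

```python
def num_colors(cache_bytes, ways, page_bytes):
    """Number of page colors in a physically indexed, set-associative cache."""
    way_bytes = cache_bytes // ways  # bytes of memory that map onto one way
    return max(1, way_bytes // page_bytes)


def page_color(phys_addr, cache_bytes, ways, page_bytes):
    """Color of the physical page that contains `phys_addr`."""
    frame = phys_addr // page_bytes  # physical page frame number
    return frame % num_colors(cache_bytes, ways, page_bytes)


CACHE = 2 * 1024 * 1024  # assumed 2 MiB cache
WAYS = 16                # assumed 16-way associativity
PAGE = 4 * 1024          # assumed 4 KiB pages

print(num_colors(CACHE, WAYS, PAGE))             # 32
print(page_color(0 * PAGE, CACHE, WAYS, PAGE))   # 0
print(page_color(32 * PAGE, CACHE, WAYS, PAGE))  # 0, competes with frame 0 for the same sets
print(page_color(33 * PAGE, CACHE, WAYS, PAGE))  # 1
```

Pages with the same color map to the same cache sets, so an allocator that spreads a process's pages across colors reduces the chance that they evict one another.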

CPU manufacturers try to avoid the cache thrashing problem by keeping separate caches for separate purposes. A common method is to implement separate L1 caches for instructions and data. Such separation prevents problems in one cache from affecting the efficiency of the other. Another method is to include CPU instructions that prefetch data into the caches, explicitly remove data from the cache or write data directly to RAM while bypassing the cache. These instructions can help to reduce cache efficiency problems, including cache thrashing and cache pollution.
