NVMe over Fabrics (NVMe-oF)

What is NVMe over Fabrics (NVMe-oF)?

NVMe over Fabrics, also known as NVMe-oF and non-volatile memory express over fabrics, is a protocol specification designed to connect hosts to storage across a network fabric using the NVMe protocol.

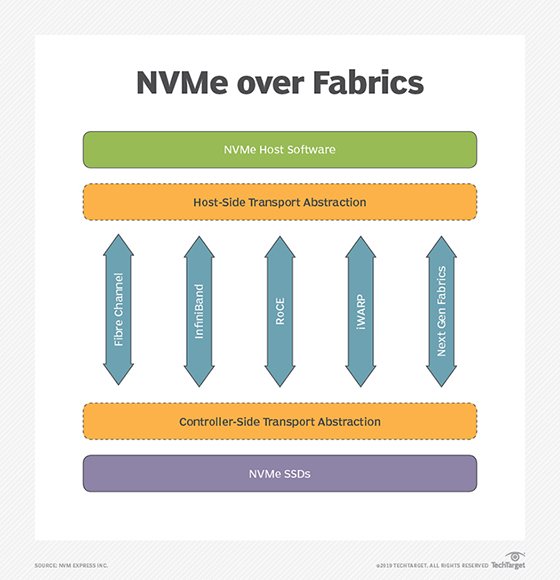

Using NVMe message-based commands, this protocol enables data transfers between a host computer and a target solid-state storage device or system over a network. Data can be transferred using Ethernet, Fibre Channel (FC) or InfiniBand.

NVMe-oF enables an organization to build a high-performance storage network with low latency, high throughput and reduced CPU utilization on application host servers.

The nonprofit organization NVM Express Inc. published version 1.0 of the NVMe specification on March 1, 2011. On June 5, 2016, it published version 1.0 of the NVMe-oF specification. NVMe version 1.3, which was released in May 2017, added features to enhance security, resource sharing and solid-state drive (SSD) endurance. NVMe 2.0 was released in June 2021 and added features such as Endurance Group Management and the Key Value and Zoned Namespaces (ZNS) command sets.

NVM Express Inc. estimated that 90% of the NVMe-oF protocol is the same as the SSD industry standard NVMe protocol, which is designed for local use over a computer's Peripheral Component Interconnect Express (PCIe) bus.

Since the initial development of NVMe-oF, the protocol has been implemented over several transports, including remote direct memory access (RDMA), FC and TCP/IP.

Uses of NVMe over Fabrics

Although it's still a relatively young technology, NVMe-oF has been widely incorporated into network architectures. It extends a storage protocol built for flash across the network, so shared storage can take fuller advantage of today's SSDs. The protocol can also help bridge the gap between direct-attached storage and storage area networks, enabling organizations to support workloads that require high throughput and low latency.

NVMe flash cards initially replaced traditional SSDs as storage media. This arrangement offers significant performance improvements over existing all-flash storage, but it also has drawbacks: NVMe requires third-party software tools to optimize write endurance and data services, and bottlenecks persist at the storage controller in NVMe arrays.

Other use cases for NVMe-oF include optimizing real-time analytics, as well as roles in artificial intelligence and machine learning.

The use of NVMe-oF is a relatively new phase in the evolution of the technology, paving the way for rack-scale flash systems that integrate native, end-to-end data management. The pace of mainstream adoption depends on how quickly the NVMe ecosystem develops across the stack.

Benefits of NVMe over Fabrics

NVMe-oF and NVMe-based storage drives offer the following advantages:

- Low latency.

- More parallel requests.

- Increased overall performance.

- Shorter operating system (OS) storage stacks on the server side.

- Improvements to storage array performance.

- Faster overall solutions when moving from Serial-Attached SCSI (SAS) or Serial Advanced Technology Attachment (SATA) drives to NVMe SSDs.

- A variety of transport options for different scenarios.

Technical characteristics of NVMe over Fabrics

Technical characteristics of NVMe-oF include the following:

- High speed.

- Low latency over networks.

- Credit-based flow control.

- Ability to scale out to thousands of devices.

- Multipath support, enabling multiple simultaneous paths between the NVMe host initiator and the storage target.

- Multihost support, enabling multiple hosts and storage subsystems to send and receive commands simultaneously.
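Credit-based flow control means a sender may transmit only when the receiver has advertised free buffer space. In Fibre Channel this takes the form of buffer-to-buffer credits, which the receiver replenishes with R_RDY primitives. The toy Python model below is a sketch of that idea only; the class and method names are hypothetical, and it does not model any real FC or NVMe-oF stack.

```python
from collections import deque


class CreditLink:
    """Toy model of a credit-based link: one credit = one free receive buffer."""

    def __init__(self, credits: int):
        if credits < 1:
            raise ValueError("a link needs at least one credit")
        self.credits = credits          # buffers the receiver has advertised
        self.receive_buffers = deque()  # frames currently held by the receiver

    def try_send(self, frame) -> bool:
        """Send a frame only if a credit is available."""
        if self.credits == 0:
            return False  # sender waits instead of overflowing the receiver
        self.credits -= 1
        self.receive_buffers.append(frame)
        return True

    def receiver_consume(self):
        """Receiver drains one buffer and returns a credit (like an FC R_RDY)."""
        frame = self.receive_buffers.popleft()
        self.credits += 1
        return frame


link = CreditLink(credits=2)
print([link.try_send(f) for f in ("f1", "f2", "f3")])  # [True, True, False]
print(link.receiver_consume())                         # f1
print(link.try_send("f3"))                             # True
```

The design choice is that congestion is handled by withholding credits rather than by dropping frames and retransmitting, which is why FC fabrics are described as lossless.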

NVMe over Fabrics vs. NVMe: Key differences

NVMe is an alternative to the Small Computer System Interface (SCSI) standard for connecting and transferring data between a host and a peripheral target storage device or system. NVMe is designed for use with faster media, such as SSDs and post-flash memory-based technologies. The NVMe standard speeds access times by several orders of magnitude compared to the SCSI and SATA protocols developed for rotating media.

NVMe supports up to 64K (65,535) I/O queues, each with a queue depth of up to 64K (65,536) commands. A command and its completion can be handled on the same processor core, which lets multicore processors achieve a high level of parallelism. I/O locking isn't required, since each core or application thread can get its own dedicated queue.
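The queue arithmetic and the per-core layout can be sketched as a toy Python model. It assumes the specification limits usually rounded to "64K": 65,535 I/O queues and 65,536 entries per queue. The `QueuePair` and `HostDriver` names are hypothetical, and nothing here talks to real hardware; the point is only that each core owns a private submission/completion queue pair, so cores never contend for the same queue.

```python
from collections import deque
from dataclasses import dataclass, field

MAX_IO_QUEUES = 65_535       # I/O queues per controller ("64K")
MAX_QUEUE_ENTRIES = 65_536   # entries per queue ("64K")


@dataclass
class QueuePair:
    """A submission queue (SQ) paired with its completion queue (CQ)."""
    qid: int
    depth: int  # usable slots in this toy; a real N-entry ring holds N - 1 commands
    sq: deque = field(default_factory=deque)
    cq: deque = field(default_factory=deque)

    def submit(self, command: str) -> None:
        if len(self.sq) >= self.depth:
            raise RuntimeError(f"SQ {self.qid} is full")
        self.sq.append(command)

    def controller_process(self) -> None:
        """Stand-in for the device: complete queued commands in order."""
        while self.sq:
            self.cq.append(("completed", self.sq.popleft()))


class HostDriver:
    """Hypothetical driver giving each CPU core a private queue pair."""

    def __init__(self, num_cores: int, depth: int = 1024):
        if not 1 <= num_cores <= MAX_IO_QUEUES:
            raise ValueError("more cores than one controller has I/O queues")
        if not 1 <= depth <= MAX_QUEUE_ENTRIES:
            raise ValueError("queue depth out of range")
        # Queue ID 0 is the admin queue, so I/O queue IDs start at 1
        self.queues = [QueuePair(qid=core + 1, depth=depth) for core in range(num_cores)]

    def queue_for_core(self, core: int) -> QueuePair:
        # No lock: in this model only the owning core touches its queue pair
        return self.queues[core]


total_entries = MAX_IO_QUEUES * MAX_QUEUE_ENTRIES  # 4,294,901,760 queue entries

driver = HostDriver(num_cores=4, depth=8)
q = driver.queue_for_core(2)
q.submit("READ lba=0")
q.controller_process()
print(q.qid, list(q.cq))  # 3 [('completed', 'READ lba=0')]
```

By contrast, a SATA drive using AHCI exposes a single queue of 32 commands, which is the gap the multi-queue design is meant to close.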

NVMe-based devices attach directly to a PCIe serial expansion slot, so there's no need for a dedicated hardware storage controller to route storage traffic between the CPU and the drive. Using NVMe, a host-based PCIe SSD can therefore transfer data more efficiently to a storage target or subsystem.

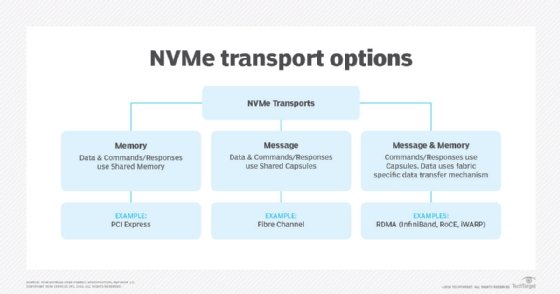

One of the main distinctions between NVMe and NVMe-oF is the transport-mapping mechanism for sending and receiving commands or responses. NVMe-oF uses a message-based model for communicating between a host and a target storage device. Local NVMe maps commands and responses to shared memory in the host over the PCIe interface protocol.
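To make the message-based model concrete, the sketch below packs a 64-byte NVMe submission queue entry (SQE) for a Read command and wraps it in a command capsule, which in NVMe-oF is the SQE optionally followed by in-capsule data. The field layout follows the NVMe common command format (opcode, command ID, namespace ID, command dwords 10 to 15). The data pointer is left zeroed as a placeholder; a real NVMe-oF host would fill it with an SGL descriptor and set the matching flag bits. The function names are hypothetical.

```python
import struct

NVME_OPC_READ = 0x02  # NVM command set opcode for Read
# Little-endian SQE layout: opcode, flags, CID, NSID, CDW2-3, MPTR, DPTR, CDW10-15
SQE_FORMAT = struct.Struct("<BBHIQQ16s6I")


def build_read_sqe(cid: int, nsid: int, slba: int, nlb: int) -> bytes:
    """Pack a 64-byte submission queue entry for an NVMe Read command."""
    if not 1 <= nlb <= 65_536:
        raise ValueError("nlb must be between 1 and 65,536 blocks")
    return SQE_FORMAT.pack(
        NVME_OPC_READ,       # CDW0 byte 0: opcode
        0,                   # CDW0 byte 1: fused-op / data-transfer flags (zero here)
        cid,                 # CDW0 bytes 2-3: command identifier
        nsid,                # CDW1: namespace ID
        0,                   # CDW2-3: reserved
        0,                   # MPTR: metadata pointer (unused)
        bytes(16),           # DPTR: data pointer, zeroed placeholder
        slba & 0xFFFF_FFFF,  # CDW10: starting LBA, low 32 bits
        slba >> 32,          # CDW11: starting LBA, high 32 bits
        nlb - 1,             # CDW12 bits 15:0: number of blocks (0-based)
        0, 0, 0,             # CDW13-15: unused here
    )


def build_command_capsule(sqe: bytes, in_capsule_data: bytes = b"") -> bytes:
    """NVMe-oF command capsule: the 64-byte SQE plus optional in-capsule data."""
    if len(sqe) != SQE_FORMAT.size:
        raise ValueError("an SQE is exactly 64 bytes")
    return sqe + in_capsule_data


sqe = build_read_sqe(cid=7, nsid=1, slba=2048, nlb=8)
capsule = build_command_capsule(sqe)
print(len(sqe), sqe[0], len(capsule))  # 64 2 64
```

Over local PCIe, the host would instead write these same 64 bytes into a submission queue in shared memory and ring a doorbell register; over a fabric, the capsule itself is what travels.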

Although NVMe delivers the performance of the underlying PCIe bus (Gen 3 when NVMe-oF was first developed), it lacks a native messaging layer to direct traffic between remote hosts and NVMe SSDs in an array. NVMe-oF is the industry's response: it supplies that messaging layer.

NVMe over Fabrics using RDMA

NVMe-oF's use of RDMA is defined by a technical subgroup of the NVM Express organization. Supported RDMA transports include InfiniBand, along with RDMA over Converged Ethernet (RoCE) and Internet Wide Area RDMA Protocol (iWARP) for Ethernet.

RDMA is a memory-to-memory transport mechanism between two computers. Data is sent from one memory address space to another without involving the OS or processor in the data path. The result is lower overhead and faster access and response times, with latency typically measured in microseconds.

NVMe serves as the protocol that moves storage traffic across RDMA fabrics. It provides a common language that compute servers and storage use to communicate about data transfers.

NVMe-oF using RDMA essentially requires implementing a new storage network, which boosts performance. The tradeoff is reduced scalability compared with FC.

NVMe over Fabrics using Fibre Channel

NVMe over Fabrics using Fibre Channel (FC-NVMe) was developed by the T11 committee of the International Committee for Information Technology Standards (INCITS). FC enables the mapping of other protocols on top of it, such as NVMe, SCSI and IBM's proprietary Fibre Connection, to send data and commands between host and target storage devices.

FC-NVMe and Gen 5, 6 or 7 FC can coexist in the same infrastructure, enabling data centers to avoid a forklift upgrade.

Organizations can use firmware to upgrade existing FC network switches, provided the host bus adapters support 16 gigabits per second (Gbps) or 32 Gbps FC and the storage targets are NVMe-oF-capable.

SCSI-based FC can also provide access to shared NVMe flash, but translating encapsulated SCSI commands into NVMe commands imposes a performance hit. The Fibre Channel Industry Association is helping to drive standards for backward-compatible FC-NVMe implementations, enabling a single FC-NVMe adapter to support SCSI-based disks, traditional SSDs and PCIe-connected NVMe flash cards.

NVMe over Fabrics using TCP/IP

One of the newer developments is NVMe-oF using TCP/IP. NVMe-oF now supports a TCP transport binding, which makes it possible to run NVMe-oF across a standard Ethernet network without configuration changes or special equipment. Because the binding works over any Ethernet network or the internet, it avoids the challenges commonly involved in deploying additional equipment and configurations.

TCP is a widely accepted standard for establishing and maintaining network communications when exchanging data across a network. TCP works in conjunction with IP; used together, the two protocols carry communications across the internet and private networks. The TCP transport binding in NVMe-oF defines how data exchanged between a host and a non-volatile memory subsystem is encapsulated and delivered.

The TCP binding also defines how queues, capsules and data are mapped, which supports TCP communications between NVMe-oF hosts and controllers through IP networks.
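A minimal sketch of that mapping: under the NVMe/TCP binding, each command capsule travels in a CapsuleCmd protocol data unit (PDU) that begins with an 8-byte common header holding the PDU type, flags, header length (HLEN), data offset (PDO) and total PDU length (PLEN). The sketch assumes no header or data digests and no padding, uses a zero-filled 64-byte SQE as a stand-in, and the helper names are hypothetical.

```python
import struct

PDU_TYPE_CAPSULE_CMD = 0x04              # CapsuleCmd PDU type in the NVMe/TCP binding
COMMON_HEADER = struct.Struct("<BBBBI")  # type, flags, HLEN, PDO, PLEN (8 bytes)
SQE_LEN = 64


def capsule_cmd_pdu(sqe: bytes, in_capsule_data: bytes = b"") -> bytes:
    """Frame one command capsule as a CapsuleCmd PDU (no digests, no padding)."""
    if len(sqe) != SQE_LEN:
        raise ValueError("SQE must be 64 bytes")
    hlen = COMMON_HEADER.size + SQE_LEN     # 8 + 64 = 72-byte PDU header
    pdo = hlen if in_capsule_data else 0    # data starts right after the header
    plen = hlen + len(in_capsule_data)      # total PDU length on the wire
    header = COMMON_HEADER.pack(PDU_TYPE_CAPSULE_CMD, 0, hlen, pdo, plen)
    return header + sqe + in_capsule_data


def parse_common_header(pdu: bytes) -> dict:
    """Read the common header a receiver uses to delimit PDUs in the TCP stream."""
    pdu_type, flags, hlen, pdo, plen = COMMON_HEADER.unpack_from(pdu, 0)
    return {"type": pdu_type, "flags": flags, "hlen": hlen, "pdo": pdo, "plen": plen}


pdu = capsule_cmd_pdu(bytes(SQE_LEN), b"\xab" * 16)
print(parse_common_header(pdu))
# {'type': 4, 'flags': 0, 'hlen': 72, 'pdo': 72, 'plen': 88}
```

Because TCP is a byte stream with no message boundaries, PLEN is what lets the receiver find where one PDU ends and the next begins.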

NVMe-oF using TCP/IP is a good choice for organizations that want to use their existing Ethernet infrastructure. It also gives them a path to migrate from Internet SCSI (iSCSI) to NVMe. For example, an organization that wants to avoid the potential hassles involved in implementing NVMe-oF using RDMA can instead use NVMe-oF over TCP/IP with the Linux kernel.
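On Linux, the in-kernel NVMe/TCP host driver (the `nvme-tcp` module) is typically driven with the `nvme` command-line tool from nvme-cli. The sketch below only assembles the argument list for an `nvme connect` call; the target address and subsystem NQN are hypothetical placeholders, and 4420 is the port conventionally used for NVMe-oF. Check `nvme connect --help` on the host for the options your version supports.

```python
import shlex


def nvme_tcp_connect_argv(traddr: str, subsys_nqn: str, trsvcid: int = 4420) -> list[str]:
    """Build an nvme-cli argv that connects to an NVMe/TCP subsystem."""
    return [
        "nvme", "connect",
        "--transport=tcp",       # use the TCP transport binding
        f"--traddr={traddr}",    # target IP address
        f"--trsvcid={trsvcid}",  # target TCP port (4420 by convention)
        f"--nqn={subsys_nqn}",   # NVMe Qualified Name of the target subsystem
    ]


# Hypothetical target. To actually connect, run the argv with
# subprocess.run(argv, check=True) as root after loading the nvme-tcp module.
argv = nvme_tcp_connect_argv("192.0.2.10", "nqn.2014-08.com.example:storage1")
print(shlex.join(argv))
```

Keeping the command as a list rather than a single shell string avoids quoting problems if the NQN or address ever comes from user input.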

Storage industry support for NVMe and NVMe-oF

Established storage vendors and startups are competing for a position within the market. All-flash NVMe and NVMe-oF storage products include the following:

- DataDirect Networks Flashscale, which supports NVMe devices.

- Dell EMC PowerMax NVMe Storage, with each PowerMax disk array enclosure able to hold 24 2.5-inch NVMe SSDs.

- Hewlett Packard Enterprise Nimble Storage platform and HPE NVMe Mainstream Performance Read Intensive SSDs, which support NVMe.

- IBM FlashSystem V9000, which supports NVMe-oF using an FC adapter.

- NetApp Fabric-Attached Storage arrays, which support NVMe and NVMe-oF with the A700 upgrade.

- Pure Storage FlashArray//X, which supports NVMe storage.

- DataDirect Networks IntelliFlash, which also supports NVMe storage.

Startups have also launched NVMe all-flash arrays, with some later being acquired by larger organizations:

- E8 Storage -- acquired by Amazon in 2019 -- used its software to replicate snapshots from the E8-D24 NVMe flash array to attached branded compute servers, a design that aimed to reduce management overhead on the array. After the acquisition, E8's technology and team were folded into AWS.

- Excelero -- acquired by Nvidia in 2022 -- offers software-defined storage, including a product called NVMesh, which supports NVMe, InfiniBand and NVMe-oF storage.

Chipmakers, network vendors prep the market

Existing chipmakers and network vendors involved in NVMe-oF include the following:

- Intel Corp., among other drive-makers, led the way with dual-ported 3D NAND-based NVMe SSDs. Intel also offers the Storage Performance Development Kit (SPDK) perf tool, which supports both local NVMe and remote NVMe-oF devices.

- Marvell offers an NVMe-oF SSD Converter Controller called the Marvell 88SN2400. This controller enables scalable, high-performance storage by providing low-latency access to SSDs over a fabric. It works with NVMe-oF NAND and storage class memory SSDs, as well as with SAS and SATA disk configurations.

- Micron offers a series of NVMe SSDs. Although the company discontinued 3D XPoint, it has tested its 7300 NVMe SSD in NVMe-oF environments using Marvell's 88SN2400 controller.

- Nvidia Networking, formerly Mellanox Technologies, which Nvidia agreed to acquire in 2019 and bought in 2020, provides an NVMe-oF storage reference architecture based on its BlueField system-on-a-chip programmable processors. The company also offers SmartNIC Software-defined Network Accelerated Processing (SNAP), which virtualizes NVMe storage. SNAP also enables the deployment of NVMe-oF technology. The first storage vendors to adopt NVMe SNAP were all-flash NVMe startups E8 Storage and Excelero.

- Seagate Technology has developed the first NVMe hard disk drive (HDD); before that, NVMe was associated only with SSDs. NVMe HDDs provide a starting point for HDD architectures that work with NVMe-oF, taking large storage pools and making them available to composable infrastructure software.
