How to calculate data center cooling requirements

Data center cooling requirements are affected by several factors, including the equipment's heat output, floor area, facility design and electrical system power rating.

Environmental effects can severely impact data center equipment. Excessive heat buildup damages servers, causing them to shut down automatically. Regularly operating them at higher-than-acceptable temperatures shortens their life span and leads to more frequent replacement.

High temperatures aren't the only danger. High humidity leads to condensation, corrosion and the buildup of contaminants, such as dust, on data center equipment. Low humidity, meanwhile, encourages electrostatic discharge between objects, which also damages equipment.

A properly calibrated cooling system can prevent these issues and keep your data center at the correct temperature and humidity 24/7. It ultimately reduces operational risk from damaged equipment.

Here's how your organization can determine what cooling standards the data center needs.

Data center cooling standards

ASHRAE develops and publishes thermal and humidity guidelines for data centers. The latest edition outlines the temperatures and humidity levels at which you can reliably operate a data center based on the equipment classification.

In the most recent guidelines, ASHRAE recommended that IT equipment be used with the following:

- Temperatures between 18 and 27 degrees Celsius or 64.4 to 80.6 degrees Fahrenheit.

- Dew point of -9 to 15 C or 15.8 to 59 degrees F.

- Relative humidity of up to 60%.
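As a rough illustration, the ranges listed above can be turned into a simple check on a sensor reading. This is a minimal sketch, not part of ASHRAE's guidelines: the function name is hypothetical, and treating the 60% relative humidity figure as an upper limit is an assumption.

```python
def in_recommended_envelope(temp_c: float, dew_point_c: float, rh_percent: float) -> bool:
    """Return True if a reading falls inside the ranges listed above."""
    temp_ok = 18.0 <= temp_c <= 27.0        # 18 to 27 degrees Celsius
    dew_ok = -9.0 <= dew_point_c <= 15.0    # dew point of -9 to 15 C
    rh_ok = rh_percent <= 60.0              # assumption: 60% is a ceiling
    return temp_ok and dew_ok and rh_ok


# A reading of 22 C, 10 C dew point and 45% relative humidity passes the check.
reading_ok = in_recommended_envelope(22.0, 10.0, 45.0)
```

Equipment in the A1 to A4 classes has wider allowable ranges than this, so a check like this one flags readings outside the recommended range only.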

The proper environment for IT equipment depends on its classification -- A1 to A4 -- which reflects the type of equipment and how it should run, in descending order of sensitivity. Class A1 covers enterprise servers and storage products that require the strictest environmental control. Class A4 applies to PCs, storage products, workstations and volume servers and has the broadest range of allowable temperatures and humidity.

Previous versions of these guidelines focused on reliability and uptime rather than energy costs. To align with data centers' increasing focus on energy-saving techniques and efficiency, ASHRAE developed classes that better outline the environmental and energy impact.

How to calculate data center cooling requirements

To calculate your data center cooling needs, you need several pieces of data: the total heat output of equipment, floor area in square feet (ft2), facility design and electrical system power rating.

One thing to remember is that some older equipment might have been designed to older ASHRAE cooling standards. If your data center has a mix of equipment, you must figure out an acceptable temperature and humidity range for all the equipment in your facility.

Here's a general calculation you can start with to get a baseline cooling size in British thermal units (Btu) per hour:

(Room square footage x 20) + (IT equipment watt usage x 3.412) + (Active people in the room x 400)
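The baseline formula can be sketched as a small function. This is an illustrative sketch only: the function name and the sample inputs are hypothetical, and the watts term uses the approximate watts-to-Btu/hour factor of 3.412.

```python
WATTS_TO_BTU_PER_HOUR = 3.412  # approximate watts-to-Btu/hour conversion factor


def baseline_cooling_btu_per_hour(room_sq_ft: float, it_watts: float, people: int) -> float:
    """Baseline cooling size in Btu/hour from floor area, IT load and occupancy."""
    floor_load = room_sq_ft * 20                   # 20 Btu/hour per square foot
    it_load = it_watts * WATTS_TO_BTU_PER_HOUR     # IT power converted to Btu/hour
    people_load = people * 400                     # 400 Btu/hour per person
    return floor_load + it_load + people_load


# Hypothetical room: 500 ft2, 10,000 W of IT equipment, 2 people.
baseline = baseline_cooling_btu_per_hour(500, 10_000, 2)
```

For this hypothetical room, the result is roughly 44,920 Btu/hour, most of it from the IT load.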

But this is just a starting point. If you want a more accurate estimate and plan for your facility's future cooling needs, keep reading.

Measuring the heat output

Heat can be expressed using various measures, including British thermal units, tons (t) and watts (W). If your equipment uses multiple units, you must convert them to a common format for comparison.

Here's a quick unit conversion chart:

| To convert... | Multiply by... |
| --- | --- |
| British thermal units/hour into watts | 0.293071 |
| Watts into British thermal units/hour | 3.412142 |
| Tons into watts | 3,516.852842 |
| Watts into tons | 0.000284 |
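The same conversions can be written as helper functions. This is a minimal sketch with hypothetical function names. It derives each reverse conversion by division instead of using the rounded multipliers, so the pairs stay consistent with each other.

```python
BTU_PER_HOUR_PER_WATT = 3.412142   # watts into Btu/hour
WATTS_PER_TON = 3516.852842        # tons of cooling into watts


def watts_to_btu_per_hour(watts: float) -> float:
    return watts * BTU_PER_HOUR_PER_WATT


def btu_per_hour_to_watts(btu_per_hour: float) -> float:
    return btu_per_hour / BTU_PER_HOUR_PER_WATT


def tons_to_watts(tons: float) -> float:
    return tons * WATTS_PER_TON


def watts_to_tons(watts: float) -> float:
    return watts / WATTS_PER_TON
```

For example, `watts_to_tons(watts)` and `btu_per_hour_to_watts(btu)` let you bring every device's heat figure into watts or tons before adding them together.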

Generally speaking, the power consumed by an IT device is nearly all converted into heat, while the power sent through data lines is negligible. That means the thermal output of the device in watts is equal to its power consumption.

Heat output special cases

Because some devices generate heat differently than the general rule of "their power consumption equals their heat output," you must calculate them separately:

- Lighting. As with IT equipment, the heat output of lighting roughly equals its wattage. Multiply the total lighting wattage by 4.25 to estimate the lighting load in Btu/hour. If you have light-emitting diode lighting, reduce this total by one-third.

- Windows. If your data facility has windows, you must calculate how much heat sunlight adds through all of them. A general calculation is 60 Btu/hour/ft2 of window. For a more precise figure, ASHRAE's calculations consider location, hours of sunlight, building materials, window materials, refraction rates and more.

- External heat (on walls, roofs, etc.). Externally facing walls or the roof can affect the total heat output in a data center, especially large ones. Consult ASHRAE's guidelines on how to handle this.

- People. Multiply the maximum number of people who'd be in the facility at any time by 400 to determine the total occupant heat load in Btu/hour.

- Uninterruptible power supply (UPS) systems. Even though these systems and units don't normally run at 100% capacity, use their maximum capacity when calculating heat output, because they could reach that level when in use.

- Power distribution systems. These systems only give off a portion of their stated power usage as heat, so use this formula to calculate their heat output: (0.02 x power system rating) + (0.02 x total IT load power).

- Voice over IP (VoIP) routers. Up to one-third of a VoIP router's power consumption is sent to remote terminals, so reduce its max power output by one-third for cooling calculations.

- HVAC and other cooling systems. Cooling fans and compressors in AC systems can create substantial heat. However, it's almost immediately released outdoors rather than inside the data center, so they can be ignored generally.
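Several of the special cases above reduce to one-line formulas. The sketch below is illustrative only: the function names are hypothetical, and the power distribution function assumes both inputs are given in watts so that the result is also in watts.

```python
def lighting_btu_per_hour(lighting_watts: float, led: bool = False) -> float:
    """Lighting load: wattage x 4.25, reduced by one-third for LED lighting."""
    load = lighting_watts * 4.25
    return load * 2 / 3 if led else load


def window_btu_per_hour(window_sq_ft: float) -> float:
    """Solar gain using the general figure of 60 Btu/hour per square foot."""
    return window_sq_ft * 60


def people_btu_per_hour(max_people: int) -> float:
    """Occupant load: 400 Btu/hour per person at maximum occupancy."""
    return max_people * 400


def power_distribution_heat_watts(system_rating_w: float, it_load_w: float) -> float:
    """(0.02 x power system rating) + (0.02 x total IT load power), all in watts."""
    return 0.02 * system_rating_w + 0.02 * it_load_w


def voip_router_heat_watts(max_power_w: float) -> float:
    """Up to one-third of the power leaves for remote terminals, so keep two-thirds."""
    return max_power_w * 2 / 3
```

The lighting, window and people functions return Btu/hour, while the other two return watts, so convert them to a common unit before adding them together.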

Calculating the total heat output

Once you've gathered all the requisite data, you can simply add them up to determine your total cooling requirements for the data center.

If you're using British thermal units per hour as your base unit, divide your total by 3,412.141633 to determine the total cooling required in kilowatts (kW).
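As a sketch of this step, the function below adds up a set of loads expressed in Btu/hour and converts the sum to kilowatts. The function name, dictionary keys and sample values are hypothetical.

```python
from typing import Mapping

BTU_PER_HOUR_PER_KW = 3412.141633


def total_cooling_kw(loads_btu_per_hour: Mapping[str, float]) -> float:
    """Sum individual heat loads (Btu/hour) and convert the total to kW."""
    return sum(loads_btu_per_hour.values()) / BTU_PER_HOUR_PER_KW


# Hypothetical loads, all already expressed in Btu/hour.
example_loads = {"floor_area": 60_000, "people": 20_000}
example_total_kw = total_cooling_kw(example_loads)
```

Keeping each load under its own key makes it easy to see which component dominates the total before you size the cooling system.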

Other environmental factors

Beyond the special environmental factors mentioned previously, a few other factors can influence a data center's heat output calculations. Ignoring them could lead to an incorrectly sized cooling system and increase your overall cooling investment.

Air humidity

HVAC systems are often designed to control humidity as well as remove heat. Ideally, they keep humidity constant, yet the air-cooling function often creates substantial condensation and, with it, a loss of humidity. As a result, many data centers use supplemental humidification equipment to make up for this loss, which adds more heat.

Large data centers with significant air mixing -- the mixing of hot and cold air from areas inside the facility -- generally need supplemental humidification. The cooling system must help compensate for the movement of the hotter air in the facility. As a result, these data centers must oversize their cooling systems by up to 30%.

Condensation isn't always an issue in smaller data centers or wiring closets, so the cooling system might be able to handle humidification on its own through the regular return ducting already in place. The return ducts eliminate the risk of condensation by design so the HVAC system can operate at 100% cooling capacity.

Oversized cooling

A data center's cooling needs can change over time, so you should consider oversizing your cooling system for future growth. Oversizing also provides redundancy if part of the cooling system fails or if you must take part of it down for maintenance. Generally speaking, HVAC consultants recommend adding as much redundancy as your budget allows or at least one more unit than your calculations say you need.

HVAC consultants typically multiply the heat output of all IT equipment by 1.5 to enable future expansion.
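The two rules of thumb in this section, the 1.5 growth multiplier and one spare cooling unit, can be sketched as follows. The function names and the 100 kW unit capacity in the example are hypothetical and used only for illustration.

```python
import math


def future_it_heat_watts(it_heat_watts: float, growth_factor: float = 1.5) -> float:
    """Scale today's IT heat output to leave room for future expansion."""
    return it_heat_watts * growth_factor


def cooling_units_required(total_load_kw: float, unit_capacity_kw: float,
                           spare_units: int = 1) -> int:
    """Units needed to cover the load, plus spare units for redundancy."""
    return math.ceil(total_load_kw / unit_capacity_kw) + spare_units


# Hypothetical example: an 836.7 kW load served by 100 kW cooling units.
units = cooling_units_required(836.7, 100.0)
```

In this hypothetical case, nine units cover the load and the spare brings the count to 10.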

Sample calculations of data center cooling requirements

Here are a couple of sample cooling calculations using various standard metrics.

1. An overall data center cooling calculation

Assume the following sample information for a typical data center.

| Item | Calculation | Total |
| --- | --- | --- |
| Floor area | 3,000 ft2 (3,000 x 20) | 60,000 Btu/hour or 17.6 kW |
| Servers and racks | 150 racks with 8 servers each (150 x 8) | 1,200 servers |
| Server power consumption | 625 W each (1,200 x 625) | 750 kW |
| UPS with battery power consumption | Maximum capacity of 1,755 Btu/hour (1 kW = 3,412.141633 Btu/hour, so 1,755 / 3,412.141633) | 0.5 kW |
| Lighting | 15,000 W of lighting (15,000 x 4.25) | 63,750 Btu/hour or 18.7 kW |
| Windows | 2,500 ft2 of windows (2,500 x 60 Btu/hour) | 150,000 Btu/hour or 44 kW |
| People | A maximum of 50 employees in the data center at any given time (50 x 400 Btu/hour) | 20,000 Btu/hour or 5.9 kW |
| Grand total | 17.6 + 750 + 0.5 + 18.7 + 44 + 5.9 | 836.7 kW of max cooling |

Because most HVAC systems are sized in tons, we can use the standard conversion equations (W x 3.412142 = Btu/hour) and (Btu/hour / 12,000 = t of cooling):

- 836.7 kW = 836,700 W x 3.412142 = 2,854,939 Btu/hour.

- 2,854,939 Btu/hour / 12,000 = 237.9 t of max cooling needed.
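The sample calculation can be reproduced with a short script. This is a sketch with hypothetical variable names. Like the table, it rounds each line item to one decimal place in kilowatts before adding them up, so it arrives at the same 836.7 kW and 237.9 t.

```python
BTU_PER_HOUR_PER_KW = 3412.141633
BTU_PER_HOUR_PER_WATT = 3.412142
BTU_PER_HOUR_PER_TON = 12_000


def btu_to_kw(btu_per_hour: float) -> float:
    """Convert Btu/hour to kW, rounded to one decimal place as in the table."""
    return round(btu_per_hour / BTU_PER_HOUR_PER_KW, 1)


line_items_kw = {
    "floor_area": btu_to_kw(3_000 * 20),     # 3,000 ft2 x 20 Btu/hour
    "servers": 150 * 8 * 625 / 1_000,        # 1,200 servers x 625 W, in kW
    "ups": btu_to_kw(1_755),                 # UPS maximum capacity
    "lighting": btu_to_kw(15_000 * 4.25),    # 15,000 W of lighting
    "windows": btu_to_kw(2_500 * 60),        # 2,500 ft2 of windows
    "people": btu_to_kw(50 * 400),           # 50 people x 400 Btu/hour
}

total_kw = round(sum(line_items_kw.values()), 1)
total_btu_per_hour = total_kw * 1_000 * BTU_PER_HOUR_PER_WATT
total_tons = total_btu_per_hour / BTU_PER_HOUR_PER_TON

print(f"{total_kw} kW = {total_btu_per_hour:,.0f} Btu/hour = {total_tons:.1f} t")
```

Without the per-item rounding, the total comes out about 0.1 kW lower, which makes no practical difference when sizing equipment.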

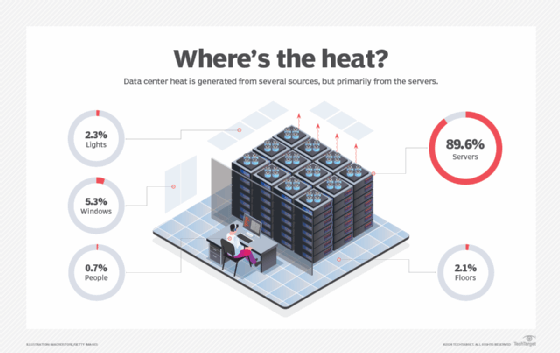

Here's a visual of how that breaks down among components, systems, people and more.

In this example data center, the UPS system generates so little heat, even at maximum use, that it's not even 1% of the total heat output. The rest of the IT equipment generates most of it.

2. Calculations for a small server room or data closet

This example covers a small server room, data closet or mini edge data center of the kind found in a generic office tower in a large city. These calculations can help determine your cooling requirements in watts. Any power system rating in kilovolt-amperes (kVA) can be treated as roughly equal to the device's power in kilowatts.

| Item | Heat calculation | Output subtotal |
| --- | --- | --- |
| General IT equipment | Same as total IT power load in watts | (5 x 2,400) + (2 x 18) = 12,036 W |
| UPS with battery (5 units at 0.9 kVA power system rating, or roughly 900 W) | (0.04 x power system rating) + (0.06 x total IT load power) | (0.04 x 900) + (0.06 x 12,036) = 758.2 W |
| Power distribution system with 8.6 kVA, or roughly 8,600 W | (0.02 x power system rating) + (0.02 x total IT load power) | (0.02 x 8,600) + (0.02 x 12,036) = 412.7 W |
| Lighting for a 10 ft x 15 ft x 10 ft room | 2 x floor area (ft2), or 21.53 x floor area (square meter) | 2 x (10 x 15) = 300 W |
| People (150 people total in the facility) | 400 Btu/hour x max # of people, converted to watts | (400 Btu/hr / 3.412142) x 150 = 17,584.3 W |
| Total cooling watts needed | 12,036 + 758.2 + 412.7 + 300 + 17,584.3 | 31,091.2 W or 31.1 kW |

Converting these into tons of cooling requires using the standard conversion equations (W x 3.412142 = Btu/hour) and (Btu/hour / 12,000 = t of cooling), so the space needs a total of the following:

- 31,091.2 W x 3.412142 = 106,087.6 Btu/hour.

- 106,087.6 Btu/hour / 12,000 = 8.8 t of cooling needed.

To determine the future cooling needs of this data closet, multiply the total IT heat output by 1.5, so 12,036 W x 1.5 = 18,054 W. Replacing the current IT load with this new number and keeping the other subtotals the same gives a future total cooling requirement of 37,109.2 W or 10.6 t of cooling. That's nearly a 20% increase.

As the modern data center changes and evolves from the large, centralized data center of a decade ago to the small, nimble edge computing data center many enterprises are building today, cooling requirements often remain the same. Concentrating that much technology in a single location requires planning an adequate cooling strategy that works for today and into the near future.

Data center cooling requirements are affected by this and more, such as the increased density of the racks, technology deployments in the facility and number of staff working there. Having a better understanding of what affects cooling can make any data center professional more knowledgeable about designing the right cooling plan for the organization's needs.

Editor's note: This article was written in 2022. It was updated in 2024 to improve the reader experience.

Julia Borgini is a freelance technical copywriter and content marketing strategist who helps B2B technology companies publish valuable content.

Jacob Roundy is a freelance writer and editor, specializing in a variety of technology topics, including data centers and sustainability.