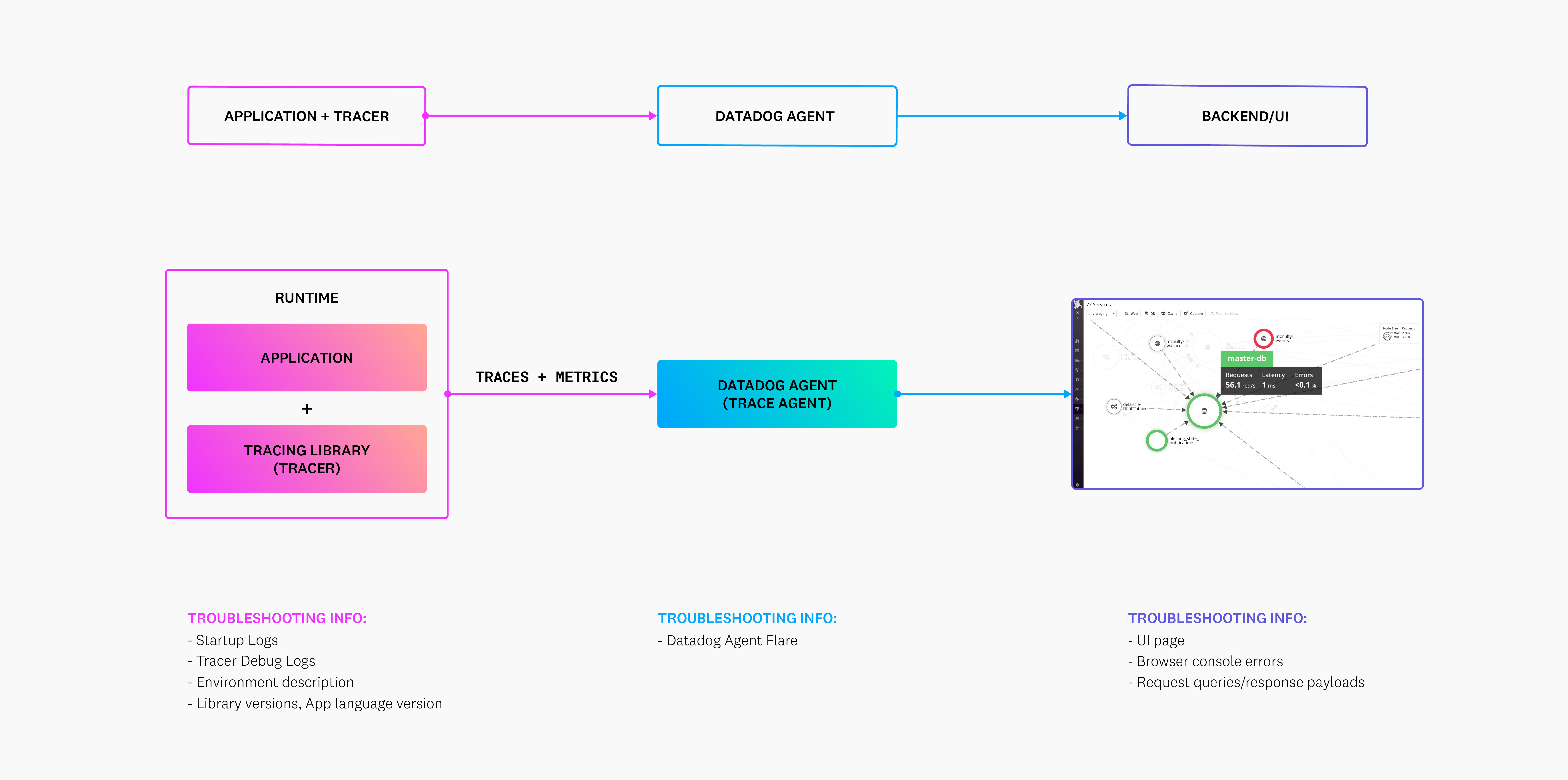

APM Troubleshooting

If you experience unexpected behavior with Datadog APM, this guide covers common issues you can investigate to resolve it quickly. If you continue to have trouble, reach out to Datadog support for further assistance. Datadog recommends regularly updating to the latest version of the Datadog tracing libraries you use, as each release contains improvements and fixes.

Troubleshooting pipeline

The following components are involved in sending APM data to Datadog:

Traces (JSON data type) and Tracing Application Metrics are generated from the application and sent to the Datadog Agent before traveling to the backend. Different troubleshooting information can be collected at each stage of the pipeline. Importantly, the Tracer debug logs are written to your application’s logs, which are separate from the Datadog Agent flare. For more information about these items, see Troubleshooting data requested by Datadog Support below.

Confirm APM setup and Agent status

During startup, Datadog tracing libraries emit logs that reflect the applied configuration as a JSON object, along with any errors encountered. In languages where this is possible, the logs also report whether the Agent can be reached. Some languages require these startup logs to be enabled with the environment variable DD_TRACE_STARTUP_LOGS=true. For more information on startup logs, see the dedicated page for troubleshooting.

Connection errors

A common source of trouble is the inability of the instrumented application to communicate with the Datadog Agent. Read about how to find and fix these problems in Connection Errors.

Tracer debug logs

To capture full details on the Datadog tracer, enable debug mode on your tracer by using the DD_TRACE_DEBUG environment variable. You might enable it for your own investigation or because Datadog support recommended it for triage purposes. However, don’t leave debug mode enabled permanently, because it introduces logging overhead.

These logs can surface instrumentation errors or integration-specific errors. For details on enabling and capturing these debug logs, see the debug mode troubleshooting page.
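As a minimal sketch of switching these diagnostics on for a single troubleshooting run, the helper below builds an environment for a child process with DD_TRACE_STARTUP_LOGS and, optionally, DD_TRACE_DEBUG set. The function name `tracer_troubleshooting_env` and the idea of launching the application as a child process are assumptions made for illustration; only the two variable names come from this guide.

```python
import os


def tracer_troubleshooting_env(base_env=None, debug=False):
    """Return a copy of the environment with tracer diagnostics enabled.

    Hypothetical helper: it only prepares environment variables and does
    not call any Datadog library.
    """
    env = dict(os.environ if base_env is None else base_env)
    # Startup logs are cheap, so they are always enabled here.
    env["DD_TRACE_STARTUP_LOGS"] = "true"
    if debug:
        # Debug mode adds logging overhead; enable it only temporarily.
        env["DD_TRACE_DEBUG"] = "true"
    else:
        env.pop("DD_TRACE_DEBUG", None)
    return env
```

The returned dictionary can be passed as the `env` argument of `subprocess.run` when starting the instrumented application, which keeps debug mode scoped to that one run instead of the whole host.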

Data volume guidelines

Your instrumented application can submit spans with timestamps up to 18 hours in the past and two hours in the future from the current time.

Datadog truncates the following strings if they exceed the indicated number of characters:

Additionally, the number of span tags present on any span cannot exceed 1024.
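The timestamp window and tag limit above can be checked before submission. The following is a rough sketch, not Datadog's own validation logic: the function names are hypothetical, and treating the window boundaries as inclusive is an assumption.

```python
from datetime import datetime, timedelta, timezone

MAX_PAST = timedelta(hours=18)    # oldest accepted span timestamp
MAX_FUTURE = timedelta(hours=2)   # furthest accepted future timestamp
MAX_SPAN_TAGS = 1024              # maximum number of tags on one span


def timestamp_in_window(span_time, now=None):
    """True if span_time lies within the accepted window around now.

    Assumption: both boundaries are treated as inclusive.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    return now - MAX_PAST <= span_time <= now + MAX_FUTURE


def tag_count_ok(tags):
    """True if the span carries no more than MAX_SPAN_TAGS tags."""
    return len(tags) <= MAX_SPAN_TAGS
```

A check like this is most useful for backfill or replay jobs, where span timestamps are set explicitly rather than taken from the current clock.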

For a given 40-minute interval, Datadog accepts the following combinations. To accommodate larger volumes, contact support to discuss your use case.

- 5000 unique environment and service combinations

- 30 unique second primary tag values per environment

- 100 unique operation names per environment and service

- 1000 unique resources per environment, service, and operation name

- 30 unique versions per environment and service
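To see how close an application is to these limits, the combinations can be counted from a sample of span metadata. The sketch below assumes each span is a plain dictionary with hypothetical keys `env`, `service`, `operation`, `resource`, and `version`; it is an offline estimate, not how Datadog enforces the limits, and it leaves out the second primary tag limit for brevity.

```python
from collections import defaultdict

# Limits taken from the list above (per 40-minute interval).
LIMITS = {
    "env_service": 5000,
    "operations_per_env_service": 100,
    "resources_per_env_service_operation": 1000,
    "versions_per_env_service": 30,
}


def cardinality_report(spans):
    """Return the largest observed count for each limited combination."""
    env_service = set()
    operations = defaultdict(set)
    resources = defaultdict(set)
    versions = defaultdict(set)
    for span in spans:
        key = (span["env"], span["service"])
        env_service.add(key)
        operations[key].add(span["operation"])
        resources[key + (span["operation"],)].add(span["resource"])
        versions[key].add(span["version"])

    def worst(groups):
        # Size of the largest group, or 0 when nothing was observed.
        return max((len(values) for values in groups.values()), default=0)

    return {
        "env_service": len(env_service),
        "operations_per_env_service": worst(operations),
        "resources_per_env_service_operation": worst(resources),
        "versions_per_env_service": worst(versions),
    }


def over_limit(report):
    """Return the names of the limits that the report exceeds."""
    return sorted(name for name, count in report.items() if count > LIMITS[name])
```

Running `over_limit(cardinality_report(spans))` on spans gathered over roughly 40 minutes gives a quick indication of which dimension, if any, needs renaming or consolidation.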

APM rate limits

If you see error messages about rate limits or max events per second in the Datadog Agent logs, you can change these limits by following these instructions. If you have questions, consult the Datadog support team before you change the limits.

APM resource usage

Read about detecting trace collection CPU usage and about calculating adequate resource limits for the Agent in Agent Resource Usage.

Modifying, discarding, or obfuscating spans

Several configuration options let you scrub sensitive data or discard traces that correspond to health checks or other unwanted traffic. You can configure these options within the Datadog Agent or, in some languages, the Tracing Client. For details on the options available, see Security and Agent Customization. Those pages offer representative examples; if you require assistance applying these options to your environment, reach out to Datadog Support.

Service naming convention issues

If the number of services exceeds what is specified in the data volume guidelines, try following these best practices for service naming conventions.

Exclude environment tag values from service names

By default, the environment (env) is the primary tag for Datadog APM.

A service is typically deployed in multiple environments, such as prod, staging, and dev. Performance metrics like request counts, latency, and error rate differ across various environments. The environment dropdown in the Service Catalog allows you to scope the data in the Performance tab to a specific environment.

One pattern that often leads to issues with an overwhelming number of services is including the environment value in service names. For example, you might have two unique services instead of one since they are operating in two separate environments: prod-web-store and dev-web-store.

Datadog recommends tuning your instrumentation by renaming your services.

Trace metrics are unsampled, which means they reflect all of your instrumented application's traffic rather than a sampled subset. The data volume guidelines also apply to them.
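One way to find services that need renaming is to look for a known environment value at the start of the service name. This is a small sketch under stated assumptions: the function name is hypothetical, and the list of environments (prod, staging, dev) is taken from the examples in this section and would need to match your own values.

```python
KNOWN_ENVS = ("prod", "staging", "dev")  # assumed environment values


def split_env_from_service(name, known_envs=KNOWN_ENVS):
    """Split 'prod-web-store' into ('prod', 'web-store').

    Returns (None, name) when the name carries no known environment prefix.
    """
    for env in known_envs:
        prefix = env + "-"
        if name.startswith(prefix) and len(name) > len(prefix):
            return env, name[len(prefix):]
    return None, name
```

With the example above, both prod-web-store and dev-web-store reduce to the single service web-store, with the removed prefix available to set as the env tag instead.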

Use the second primary tag instead of putting metric partitions or grouping variables into service names

Second primary tags are additional tags that you can use to group and aggregate your trace metrics. You can use the dropdown to scope the performance data to a given cluster name or data center value.

Including metric partitions or grouping variables in service names, instead of applying the second primary tag, unnecessarily inflates the number of unique services in an account and can result in delays or data loss.

For example, instead of the service web-store, you might decide to name different instances of a service web-store-us-1, web-store-eu-1, and web-store-eu-2 to see performance metrics for these partitions side-by-side. Datadog recommends implementing the region value (us-1, eu-1, eu-2) as a second primary tag.
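The same kind of check can separate a region suffix from a service name so that it can be applied as a second primary tag. The sketch below assumes the suffix looks like the examples above (two lowercase letters, a hyphen, and a number); both the pattern and the function name are illustrative assumptions, not a Datadog convention.

```python
import re

# Assumed suffix shape, based on the examples us-1, eu-1, and eu-2.
REGION_SUFFIX = re.compile(r"^(?P<service>.+)-(?P<region>[a-z]{2}-\d+)$")


def split_region_from_service(name):
    """Split 'web-store-us-1' into ('web-store', 'us-1').

    Returns (name, None) when the name has no region-like suffix.
    """
    match = REGION_SUFFIX.match(name)
    if match:
        return match.group("service"), match.group("region")
    return name, None
```

Applied to web-store-us-1, web-store-eu-1, and web-store-eu-2, this yields one service name, web-store, and three values for the second primary tag.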

Troubleshooting data requested by Datadog Support

When you open a support ticket, our support team may ask for some combination of the following types of information:

How are you confirming the issue? Provide links to a trace (preferably) or screenshots, for example, and tell support what you expect to see.

This allows Support to confirm errors and attempt to reproduce your issues within Datadog’s testing environments.

Startup logs are a great way to spot misconfiguration of the tracer, or the inability of the tracer to communicate with the Datadog Agent. By comparing the configuration that the tracer sees to the one set within the application or container, Support can identify areas where a setting is not being properly applied.

Tracer debug logs go one step deeper than startup logs, and help to identify whether integrations are instrumenting properly in a manner that can’t necessarily be checked until traffic flows through the application. Debug logs can be extremely useful for viewing the contents of spans created by the tracer and can surface an error if there is a connection issue when attempting to send spans to the Agent. Tracer debug logs are typically the most informative and reliable tool for confirming nuanced behavior of the tracer.

A Datadog Agent flare (snapshot of logs and configs) that captures a representative log sample from a period when traces are sent to your Datadog Agent while it is in debug or trace mode, depending on what information you are looking for in these logs.

Datadog Agent flares enable you to see what is happening within the Datadog Agent, for example, whether traces are being rejected or are malformed. A flare does not help if traces are not reaching the Datadog Agent, but it does help identify the source of an issue or any metric discrepancies.

When adjusting the log level to debug or trace mode, take into consideration that these significantly increase log volume and therefore consumption of system resources (namely storage space over the long term). Datadog recommends these only be used temporarily for troubleshooting purposes and the level be restored to info afterward.

Note: If you are using the Datadog Agent v7.19+ and the Datadog Helm Chart with the latest version, or a DaemonSet where the Datadog Agent and trace-agent are in separate containers, you will need to run the following command with log_level: DEBUG or log_level: TRACE set in your datadog.yaml to get a flare from the trace-agent:

kubectl exec -it <agent-pod-name> -c trace-agent -- agent flare <case-id> --local

A description of your environment

Knowing how your application is deployed helps the Support team identify likely causes of tracer-agent communication problems or misconfigurations. For difficult issues, Support may ask to see a Kubernetes manifest or an ECS task definition, for example.

Custom code written using the tracing libraries, such as tracer configuration, custom instrumentation, and adding span tags

Custom instrumentation can be a powerful tool, but it can also have unintended side effects on your trace visualizations within Datadog, so Support may ask about it to rule it out as a cause.

Additionally, asking for your automatic instrumentation and configuration allows Datadog to confirm if this matches what it is seeing in both tracer startup and debug logs.

Versions of the:

- programming language, frameworks, and dependencies used to build the instrumented application

- Datadog Tracer

- Datadog Agent

Knowing what versions are being used allows us to ensure integrations are supported in our Compatibility Requirements section, check for known issues, or recommend a tracer or language version upgrade if it will address the problem.
