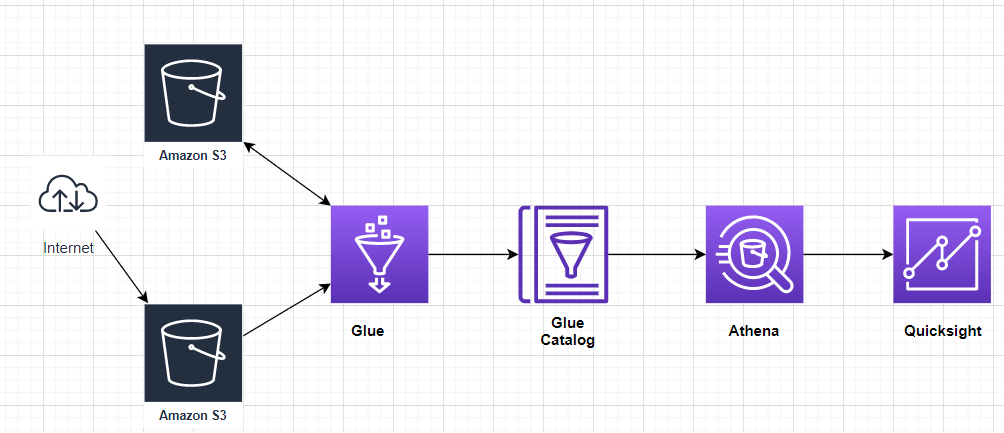

Data pipeline with Snowflake

Upload and store the required data in an S3 bucket

Create a pipeline using Glue

Write queries in Athena and build visualizations in Amazon QuickSight

Downloading data from an AWS S3 bucket requires:

pip install boto3

pip install s3fs
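As a minimal sketch of the download step, the helper below lists every object under a prefix and saves it locally with boto3. The function name `download_prefix` and the flat local layout are assumptions made for illustration, not part of the original project; the client is passed in so the helper can be exercised without AWS access.

```python
import os


def download_prefix(s3_client, bucket, prefix, dest_dir):
    """Download every object under `prefix` in `bucket` into `dest_dir`.

    `s3_client` is expected to behave like boto3.client("s3").
    Returns the list of local file paths that were written.
    """
    os.makedirs(dest_dir, exist_ok=True)
    downloaded = []
    # list_objects_v2 returns at most 1000 keys per call, so paginate.
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            key = obj["Key"]
            if key.endswith("/"):
                # Skip zero-byte "folder" placeholder objects.
                continue
            # Assumption: file names are unique, so a flat layout is enough.
            local_path = os.path.join(dest_dir, os.path.basename(key))
            s3_client.download_file(bucket, key, local_path)
            downloaded.append(local_path)
    return downloaded


def make_s3_client():
    """Build a real client; credentials come from the environment or ~/.aws."""
    import boto3  # imported lazily so download_prefix works with a stub client

    return boto3.client("s3")
```

With credentials configured, a call such as `download_prefix(make_s3_client(), "my-bucket", "raw/", "data")` would fetch the files; the bucket and prefix names here are placeholders.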

To web scrape using Beautiful Soup:
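The README does not say which pages are scraped, so the following is only a sketch under the assumption that the source is an HTML directory listing that links to CSV files. The function names and the `.csv` / `.csv.gz` suffixes are hypothetical; Beautiful Soup itself is installed with `pip install beautifulsoup4`.

```python
from urllib.parse import urljoin
from urllib.request import urlopen


def extract_hrefs(html):
    """Return the href attribute of every <a> tag in an HTML page."""
    from bs4 import BeautifulSoup  # imported lazily; needs beautifulsoup4

    soup = BeautifulSoup(html, "html.parser")
    return [a["href"] for a in soup.find_all("a", href=True)]


def csv_links(hrefs, base_url, suffixes=(".csv", ".csv.gz")):
    """Keep only links that look like CSV files and make them absolute."""
    return [urljoin(base_url, h) for h in hrefs if h.lower().endswith(suffixes)]


def list_csv_files(index_url):
    """Fetch a listing page and return absolute URLs of the CSV files on it."""
    with urlopen(index_url) as response:
        html = response.read().decode("utf-8", errors="replace")
    return csv_links(extract_hrefs(html), index_url)
```

Splitting the link filtering from the HTML parsing keeps the filtering logic easy to check without network access.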

Create an access key in AWS

Create a storage bucket in S3 for each dataset and upload the scraped Storm data and SEVIR data to their respective S3 buckets
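The upload could be scripted roughly as follows. This is a sketch that assumes the scraped files sit in one local directory per dataset; `upload_directory` and the example bucket names are hypothetical. boto3 picks up the access key from the `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` environment variables or from `~/.aws/credentials`.

```python
import os


def upload_directory(s3_client, local_dir, bucket, prefix=""):
    """Upload every regular file in `local_dir` to `bucket` under `prefix`.

    `s3_client` is expected to behave like boto3.client("s3").
    Returns the list of object keys that were uploaded.
    """
    uploaded = []
    for name in sorted(os.listdir(local_dir)):
        path = os.path.join(local_dir, name)
        if not os.path.isfile(path):
            continue  # subdirectories are ignored in this sketch
        key = f"{prefix}{name}"
        s3_client.upload_file(path, bucket, key)
        uploaded.append(key)
    return uploaded


def make_s3_client():
    """Build a real client from the configured access key."""
    import boto3  # imported lazily so upload_directory works with a stub client

    return boto3.client("s3")
```

For example, `upload_directory(make_s3_client(), "storm_csv", "my-storm-bucket", "raw/")` would push the Storm files, and a second call with another directory and bucket would handle the SEVIR files; all of these names are placeholders.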

Create a pipeline using Glue

In the AWS console, select Glue and schedule a Glue job that creates a combined dataset and pushes it into an S3 bucket.
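The same scheduling can be done from code instead of the console. The sketch below assumes the ETL script that combines the datasets has already been uploaded to S3 and that an IAM role for Glue exists; the function name, the trigger naming scheme, and the Glue version are illustrative assumptions.

```python
def schedule_glue_job(glue_client, job_name, role_arn, script_s3_path, cron):
    """Register a Glue ETL job and attach a scheduled trigger to it.

    `glue_client` is expected to behave like boto3.client("glue").
    `cron` uses the AWS six-field form, e.g. "cron(0 6 * * ? *)"
    for every day at 06:00 UTC. Returns the trigger name.
    """
    glue_client.create_job(
        Name=job_name,
        Role=role_arn,
        Command={
            "Name": "glueetl",  # Spark ETL job type
            "ScriptLocation": script_s3_path,
            "PythonVersion": "3",
        },
        GlueVersion="4.0",  # assumption: any supported version would do
    )
    trigger_name = f"{job_name}-schedule"  # hypothetical naming scheme
    glue_client.create_trigger(
        Name=trigger_name,
        Type="SCHEDULED",
        Schedule=cron,
        Actions=[{"JobName": job_name}],
        StartOnCreation=True,
    )
    return trigger_name


def make_glue_client():
    """Build a real client; credentials come from the environment or ~/.aws."""
    import boto3  # imported lazily so schedule_glue_job works with a stub

    return boto3.client("glue")
```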

Use a Glue crawler to read the data in S3 and infer its schema.

Once the crawler is created, run it; it will create a Glue Data Catalog database and tables.
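A crawler can also be created and run programmatically. The following is a sketch, assuming a single S3 path as the crawl target and an existing IAM role; `run_crawler` and its parameters are hypothetical names.

```python
import time


def run_crawler(glue_client, name, role_arn, database, s3_path, poll_seconds=15):
    """Create a crawler over `s3_path`, run it once, and list the tables.

    `glue_client` is expected to behave like boto3.client("glue").
    Returns the names of the tables found in `database` afterwards.
    """
    glue_client.create_crawler(
        Name=name,
        Role=role_arn,
        DatabaseName=database,
        Targets={"S3Targets": [{"Path": s3_path}]},
    )
    glue_client.start_crawler(Name=name)
    # The crawler moves through RUNNING and STOPPING before it is READY again.
    while glue_client.get_crawler(Name=name)["Crawler"]["State"] != "READY":
        time.sleep(poll_seconds)
    # Only the first page of tables is read; enough for a handful of tables.
    tables = glue_client.get_tables(DatabaseName=database)["TableList"]
    return [t["Name"] for t in tables]
```

The returned table names are the ones Athena will see in the Data Catalog database.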

Query using Athena and visualize using QuickSight

Now point Athena at the table created by the crawler and run queries against it.
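Queries can be submitted from Python as well as from the Athena console. This sketch assumes an S3 location for query results has been chosen; the function name and the dict-per-row return shape are illustrative choices.

```python
import time


def run_athena_query(athena_client, sql, database, output_s3, poll_seconds=2):
    """Run `sql` in Athena and return the first page of rows as dicts.

    `athena_client` is expected to behave like boto3.client("athena").
    `output_s3` is the s3:// location where Athena writes result files.
    """
    query_id = athena_client.start_query_execution(
        QueryString=sql,
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_s3},
    )["QueryExecutionId"]

    # Poll until the query reaches a terminal state.
    while True:
        execution = athena_client.get_query_execution(QueryExecutionId=query_id)
        status = execution["QueryExecution"]["Status"]
        if status["State"] in ("SUCCEEDED", "FAILED", "CANCELLED"):
            break
        time.sleep(poll_seconds)
    if status["State"] != "SUCCEEDED":
        reason = status.get("StateChangeReason", "no reason given")
        raise RuntimeError(f"Athena query {status['State']}: {reason}")

    # For SELECT statements the first row holds the column names.
    rows = athena_client.get_query_results(QueryExecutionId=query_id)["ResultSet"]["Rows"]
    table = [[cell.get("VarCharValue") for cell in row["Data"]] for row in rows]
    header, body = table[0], table[1:]
    return [dict(zip(header, values)) for values in body]
```

All values come back as strings (or `None` for nulls), so numeric columns need converting before further analysis.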

Lastly, view the results in Amazon QuickSight.
