Planet Python

Last update: July 11, 2024 07:43 PM UTC

July 11, 2024

Python Software Foundation

Announcing Our New Infrastructure Engineer

We are excited to announce that Jacob Coffee has joined the Python Software Foundation staff as an Infrastructure Engineer, bringing his experience as an Open Source maintainer, dedicated homelab maintainer, and professional systems administrator to the team. Jacob will be the second member of our Infrastructure staff, reporting to Director of Infrastructure, Ee Durbin.

Joining our team, Jacob will share the responsibility of maintaining the PSF systems and services that serve the Python community, CPython development, and our internal operations. This will add crucially needed redundancy to the team as well as capacity to undertake new initiatives with our infrastructure.

Jacob shares, “I’m living the dream by supporting the PSF mission AND working in open source! I’m thrilled to be a part of the PSF team and deepen my contributions to the Python community.”

In just the first few days, Jacob has already shown initiative on multiple projects and issues throughout the infrastructure and we’re excited to see the impact he’ll have on the PSF and broader Python community. We hope that you’ll wish him a warm welcome as you see him across the repos, issue trackers, mailing lists, and discussion forums!

Nicola Iarocci

Microsoft MVP

Last night, I was at an outdoor theatre with Serena, watching Anatomy of a Fall (an excellent film). Outdoor theatres are becoming rare, which is a pity, and Arena del Sole is lovely with its strong vintage, 80s vibe. There’s little as pleasant as watching a film under the stars with your loved one on a quiet summer evening.

Anyway, in the pause, I glanced at my e-mails and discovered I had been again granted the Microsoft MVP Award. It is the ninth consecutive year, and I’m grateful and happy the journey continues. At this point, I should put in some extra effort to reach the 10-year milestone next year.

Robin Wilson

Searching an aerial photo with text queries – a demo and how it works

Summary: I’ve created a demo web app where you can search an aerial photo of Southampton, UK using text queries such as "roundabout", "tennis court" or "ship". It uses vector embeddings to do this – which I explain in this blog post.

In this post I’m going to try and explain a bit more about how this works.

Firstly, I should explain that the only data used for the searching is the aerial image data itself – even though a number of these things will be shown on the OpenStreetMap map, none of that data is used, so you can also search for things that wouldn’t be shown on a map (like a blue bus).

The main technique that lets us do this is vector embeddings. I strongly suggest you read Simon Willison’s great article/talk on embeddings but I’ll try and explain here too. An embedding model lets you turn a piece of data (for example, some text, or an image) into a constant-length vector – basically just a sequence of numbers. This vector would look something like [0.283, -0.825, -0.481, 0.153, ...] and would be the same length (often hundreds or even thousands of elements long) regardless of how long the data you fed into it was.

In this case, I’m using the SkyCLIP model which produces vectors that are 768 elements long. One of the key features of these vectors is that the model is trained to produce similar vectors for things that are similar in some way. For example, a text embedding model may produce a similar vector for the words "King" and "Queen", or "iPad" and "tablet". The ‘closer’ a vector is to another vector, the more similar the data that produced it.

The SkyCLIP model was trained on image-text pairs – so a load of images that had associated text describing what was in the image. SkyCLIP’s training data "contains 5.2 million remote sensing image-text pairs in total, covering more than 29K distinct semantic tags" – and these semantic tags and the text descriptions of them were generated from OpenStreetMap data.

Once we’ve got the vectors, how do we work out how close vectors are? Well, we can treat the vectors as encoding a point in 768-dimensional space. That’s a bit difficult to visualise – so imagine a point in 2- or 3-dimensional space as that’s easier, plotted on a graph. Vectors for similar things will be located physically closer on the graph – and one way of calculating similarity between two vectors is just to measure the multi-dimensional distance on a graph. In this situation we’re actually using cosine similarity, which gives a number between -1 and +1 representing the similarity of two vectors.
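As a rough illustration of the cosine similarity mentioned above, here is a minimal pure-Python sketch. The three-element vectors are made up for readability and simply stand in for real 768-element embeddings; the function name `cosine_similarity` is an illustrative choice, not something taken from the app's code.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy 3-element vectors standing in for real 768-element embeddings.
same_direction = cosine_similarity([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])    # close to +1
orthogonal = cosine_similarity([1.0, 0.0, 0.0], [0.0, 1.0, 0.0])        # close to 0
opposite = cosine_similarity([1.0, 2.0, 3.0], [-1.0, -2.0, -3.0])       # close to -1
```

Note that the result depends only on the direction of the vectors, not their length, which is why scaling a vector by 2 leaves the similarity unchanged.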

So, we now have a way to calculate an embedding vector for any piece of data. The next step we take is to split the aerial image into lots of little chunks – we call them ‘image chips’ – and calculate the embedding of each of those chunks, and then compare them to the embedding calculated from the text query.

I used the RasterVision library for this, and I’ll show you a bit of the code. First, we generate a sliding window dataset, which will allow us to then iterate over image chips. We define the size of the image chip to be 200×200 pixels, with a ‘stride’ of 100 pixels which means each image chip will overlap the ones on each side by 100 pixels. We then configure it to resize the output to 224×224 pixels, which is the size that the SkyCLIP model expects as input.

    ds = SemanticSegmentationSlidingWindowGeoDataset.from_uris(
        image_uri=uri,
        image_raster_source_kw=dict(channel_order=[0, 1, 2]),
        size=200,
        stride=100,
        out_size=224,
    )

We then iterate over all of the image chips, run the model to calculate the embedding and stick it into a big array:

    dl = DataLoader(ds, batch_size=24)

    EMBEDDING_DIM_SIZE = 768
    embs = torch.zeros(len(ds), EMBEDDING_DIM_SIZE)

    with torch.inference_mode(), tqdm(dl, desc='Creating chip embeddings') as bar:
        i = 0
        for x, _ in bar:
            x = x.to(DEVICE)
            emb = model.encode_image(x)
            embs[i:i + len(x)] = emb.cpu()
            i += len(x)

    # normalize the embeddings
    embs /= embs.norm(dim=-1, keepdim=True)
    embs.shape

We also do a fair amount of fiddling around to get the locations of each chip and store those too.
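To give a feel for how chip locations relate to the chip size and stride, here is a simplified sketch in plain Python. It is an assumption about the general idea, not the code used in the app or RasterVision's own windowing logic: it ignores partial windows at the image edges, and the function names and the 500×400 image size are hypothetical.

```python
def window_origins(length, size, stride):
    """Top-left offsets of windows of `size` pixels taken every `stride` pixels."""
    return list(range(0, length - size + 1, stride))


def chip_boxes(width, height, size=200, stride=100):
    """Pixel bounding boxes (xmin, ymin, xmax, ymax) for every full chip."""
    return [
        (x, y, x + size, y + size)
        for y in window_origins(height, size, stride)
        for x in window_origins(width, size, stride)
    ]


# A hypothetical 500x400 pixel image, using the 200/100 size and stride from above.
boxes = chip_boxes(width=500, height=400)
```

With a stride of half the chip size, every chip overlaps its neighbours by 100 pixels, so an object that straddles one chip boundary is likely to sit fully inside an adjacent chip.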

Once we’ve stored all of those (I’ll get on to storage in a moment), we need to calculate the embedding of the text query too – which can be done with code like this:

    text = tokenizer(text_queries)

    with torch.inference_mode():
        text_features = model.encode_text(text.to(DEVICE))
        text_features /= text_features.norm(dim=-1, keepdim=True)
        text_features = text_features.cpu()

It’s then ‘just’ a matter of comparing the text query embedding to the embeddings of all of the image chips, and finding the ones that are closest to each other.

To do this, we can use a vector database. There are loads of different vector databases to choose from, but I’d recently been to a tutorial at PyData Southampton (I’m one of the co-organisers, and I strongly recommend attending if you’re in the area) which used the Pinecone serverless vector database, and they have a fairly generous free tier, so I thought I’d try that.

Pinecone, like all other vector databases, allows you to insert a load of vectors and their metadata (in this case, their location in the image) into the database, and then search the database to find the vectors closest to a ‘search vector’ you provide.
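The insert-then-search pattern described above can be sketched with a toy in-memory index. To be clear, this is not Pinecone's API: the class `TinyVectorIndex`, its `upsert` and `query` methods, and the sample vectors and metadata are all hypothetical, and a real vector database uses approximate search structures rather than the brute-force scan shown here.

```python
import math


class TinyVectorIndex:
    """A toy in-memory stand-in for a vector database (not Pinecone's API)."""

    def __init__(self):
        self._items = []  # list of (id, unit-length vector, metadata)

    @staticmethod
    def _normalize(vector):
        norm = math.sqrt(sum(v * v for v in vector))
        return [v / norm for v in vector]

    def upsert(self, item_id, vector, metadata):
        # Replace any existing entry with the same id, then store the new one.
        self._items = [item for item in self._items if item[0] != item_id]
        self._items.append((item_id, self._normalize(vector), metadata))

    def query(self, vector, top_k=3):
        # For unit-length vectors the dot product equals the cosine similarity.
        q = self._normalize(vector)
        scored = [
            (sum(a * b for a, b in zip(q, stored)), item_id, metadata)
            for item_id, stored, metadata in self._items
        ]
        scored.sort(key=lambda entry: entry[0], reverse=True)
        return scored[:top_k]


index = TinyVectorIndex()
index.upsert("chip-0", [0.9, 0.1, 0.0], {"x": 0, "y": 0})
index.upsert("chip-1", [0.0, 1.0, 0.0], {"x": 100, "y": 0})
index.upsert("chip-2", [0.7, 0.7, 0.1], {"x": 200, "y": 0})

# Made-up query vector; a real one would come from the text encoder.
results = index.query([1.0, 0.0, 0.0], top_k=2)
best_score, best_id, best_metadata = results[0]
```

The metadata returned with each match is what lets the front-end place a result back on the map.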

I won’t bother showing you all the code for this side of things: it’s fairly standard code for calling Pinecone APIs, mostly copied from their tutorials.

I then wrapped this all up in a FastAPI API, and put a simple JavaScript front-end on it to display the results on a Leaflet web map. I also added some basic caching to stop us hitting the Pinecone API too frequently (as there is a limit to the number of API calls you can make on the free plan). And that’s pretty much it.
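The post doesn't say how the caching was done, so the following is only one plausible approach, using `functools.lru_cache` from the standard library. The function `search_vector_db` is a hypothetical stand-in for the rate-limited database call, and the call counter exists purely to show that repeated queries don't reach it.

```python
from functools import lru_cache

call_count = 0


def search_vector_db(query):
    """Hypothetical stand-in for the rate-limited vector database call."""
    global call_count
    call_count += 1
    return (f"results for {query!r}",)


@lru_cache(maxsize=256)
def cached_search(query):
    # Normalisation happens in the caller so equivalent queries share an entry.
    return search_vector_db(query)


def search(query):
    return cached_search(query.strip().lower())


first = search("Tennis court")
second = search("tennis court ")  # served from the cache, no second backend call
```

Normalising the query text before the cache lookup is a small design choice that makes the cache more effective for a public demo, where many visitors type the same few words.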

I hope the explanation made sense: have a play with the app here and post a comment with any questions.

July 10, 2024

Real Python

How Do You Choose Python Function Names?

One of the hardest decisions in programming is choosing names. Programmers often use this phrase to highlight the challenges of selecting Python function names. It may be an exaggeration, but there’s still a lot of truth in it.

There are some hard rules you can’t break when naming Python functions and other objects. There are also other conventions and best practices that don’t raise errors when you break them, but they’re still important when writing Pythonic code.

Choosing the ideal Python function names makes your code more readable and easier to maintain. Code with well-chosen names can also be less prone to bugs.

In this tutorial, you’ll learn about the rules and conventions for naming Python functions and why they’re important. So, how do you choose Python function names?

Get Your Code: Click here to download the free sample code that you’ll use as you learn how to choose Python function names.

In Short: Use Descriptive Python Function Names Using snake_case

In Python, the labels you use to refer to objects are called identifiers or names. You set a name for a Python function when you use the def keyword.

When creating Python names, you can use uppercase and lowercase letters, the digits 0 to 9, and the underscore (_). However, you can’t use digits as the first character. You can use some other Unicode characters in Python identifiers, but not all Unicode characters are valid. Not even 🐍 is valid!

Still, it’s preferable to use only the Latin characters present in ASCII. The Latin characters are easier to type and more universally found on most keyboards. Using other characters rarely improves readability and can be a source of bugs.

Here are some syntactically valid and invalid names for Python functions and other objects:

| Name | Validity | Notes |
|---|---|---|
| number | Valid | |
| first_name | Valid | |
| first name | Invalid | No whitespace allowed |
| first_10_numbers | Valid | |
| 10_numbers | Invalid | No digits allowed at the start of names |
| _name | Valid | |
| greeting! | Invalid | No ASCII punctuation allowed except for the underscore (_) |
| café | Valid | Not recommended |
| 你好 | Valid | Not recommended |
| hello⁀world | Valid | Not recommended: connector punctuation characters and other marks are valid characters |
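If you'd like to check names like the ones in the table yourself, a small sketch using `str.isidentifier()` and the standard `keyword` module follows. The helper name `is_valid_name` and the list of candidates are illustrative choices.

```python
import keyword

candidates = [
    "number", "first name", "10_numbers", "_name",
    "greeting!", "café", "你好", "import",
]


def is_valid_name(name):
    """True if `name` is a syntactically valid identifier and not a reserved keyword."""
    return name.isidentifier() and not keyword.iskeyword(name)


validity = {name: is_valid_name(name) for name in candidates}
```

The extra `keyword.iskeyword()` check is needed because `str.isidentifier()` on its own returns `True` for reserved words such as `import`.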

However, Python has conventions about naming functions that go beyond these rules. One of the core Python Enhancement Proposals, PEP 8, defines Python’s style guide, which includes naming conventions.

According to PEP 8 style guidelines, Python functions should be named using lowercase letters and with an underscore separating words. This style is often referred to as snake case. For example, get_text() is a better function name than getText() in Python.

Function names should also describe the actions being performed by the function clearly and concisely whenever possible. For example, for a function that calculates the total value of an online order, calculate_total() is a better name than total().
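As a small illustration of the difference between the two styles, here is a sketch of a helper that rewrites camel case names in snake case. The function `to_snake_case` is a hypothetical example, not part of the tutorial's sample code.

```python
import re


def to_snake_case(name):
    """Convert a camelCase or PascalCase name to snake_case."""
    # Insert an underscore before every capital letter that is not at the start.
    return re.sub(r"(?<!^)(?=[A-Z])", "_", name).lower()


converted = [to_snake_case(n) for n in ("getText", "calculateTotal", "FindWinner")]
```

This simple pattern treats every capital letter as the start of a new word, so names containing acronyms would need extra handling.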

You’ll explore these conventions and best practices in more detail in the following sections of this tutorial.

What Case Should You Use for Python Function Names?

Several character cases, like snake case and camel case, are used in programming for identifiers to name the various entities. Programming languages have their own preferences, so the right style for one language may not be suitable for another.

Python functions are generally written in snake case. When you use this format, all the letters are lowercase, including the first letter, and you use an underscore to separate words. You don’t need to use an underscore if the function name includes only one word. The following function names are examples of snake case:

    find_winner()
    save()

Both function names include lowercase letters, and one of them has two English words separated by an underscore. You can also use the underscore at the beginning or end of a function name. However, there are conventions outlining when you should use the underscore in this way.

You can use a single leading underscore, such as with _find_winner(), to indicate that a function is meant only for internal use. An object with a leading single underscore in its name can be used internally within a module or a class. While Python doesn’t enforce private variables or functions, a leading underscore is an accepted convention to show the programmer’s intent.

A single trailing underscore is used by convention when you want to avoid a conflict with existing Python names or keywords. For example, you can’t use the name import for a function since import is a keyword. You can’t use keywords as names for functions or other objects. You can choose a different name, but you can also add a trailing underscore to create import_(), which is a valid name.

You can also use a single trailing underscore if you wish to reuse the name of a built-in function or other object. For example, if you want to define a function that you’d like to call max, you can name your function max_() to avoid conflict with the built-in function max().

Unlike the case with the keyword import, max() is not a keyword but a built-in function. Therefore, you could define your function using the same name, max(), but it’s generally preferable to avoid this approach to prevent confusion and ensure you can still use the built-in function.
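The distinction between keywords and built-in names can be checked directly, as in this short sketch. The `max_()` function and its `limit` parameter are made up for illustration.

```python
import builtins
import keyword


def max_(values, limit):
    """Largest value in `values`, capped at `limit` (a made-up example function)."""
    # The built-in max() is still available because we did not shadow it.
    return min(max(values), limit)


import_is_keyword = keyword.iskeyword("import")  # keywords cannot be used as names
max_is_keyword = keyword.iskeyword("max")        # built-ins can, but shadowing is discouraged
max_is_builtin = hasattr(builtins, "max")
capped = max_([3, 12, 7], limit=10)
```

Because the function is called `max_()` rather than `max()`, its body can still rely on the built-in.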

Double leading underscores are also used for attributes in classes. This notation invokes name mangling, which makes it harder for a user to access these attributes and prevents subclasses from accessing them by their plain names. You’ll read more about name mangling and attributes with double leading underscores later.
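A minimal sketch of name mangling in action follows. The `Account` and `SavingsAccount` classes are hypothetical examples chosen for illustration.

```python
class Account:
    def __init__(self, balance):
        self.__balance = balance  # stored as _Account__balance

    def balance(self):
        return self.__balance


class SavingsAccount(Account):
    def peek(self):
        # Inside this class the name is mangled to _SavingsAccount__balance,
        # which does not exist, so this raises AttributeError.
        return self.__balance


account = SavingsAccount(100)

try:
    account.peek()
    subclass_access_failed = False
except AttributeError:
    subclass_access_failed = True

# The attribute is still reachable through its mangled name.
mangled_value = account._Account__balance
```

As the last line shows, mangling is a way to avoid accidental clashes rather than a strict access control mechanism.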

Read the full article at https://realpython.com/python-function-names/ »

[ Improve Your Python With 🐍 Python Tricks 💌 – Get a short & sweet Python Trick delivered to your inbox every couple of days. >> Click here to learn more and see examples ]

Quiz: Choosing the Best Font for Programming

In this quiz, you’ll test your understanding of how to choose the best font for your daily programming. You’ll get questions about the technicalities and features to consider when choosing a programming font and refresh your knowledge about how to spot a high-quality coding font.


July 09, 2024

PyCoder’s Weekly

Issue #637 (July 9, 2024)


Python Grapples With Apple App Store Rejections

A string that is part of the urllib parser module in Python references a scheme for apps that use the iTunes feature to install other apps, which is disallowed. Auto scanning by Apple is rejecting any app that uses Python 3.12 underneath. A solution has been proposed for Python 3.13.

JOE BROCKMEIER

Python’s Built-in Functions: A Complete Exploration

In this tutorial, you’ll learn the basics of working with Python’s numerous built-in functions. You’ll explore how you can use these predefined functions to perform common tasks and operations, such as mathematical calculations, data type conversions, and string manipulations.

REAL PYTHON

Python Error and Performance Monitoring That Doesn’t Suck

With Sentry, you can trace issues from the frontend to the backend—detecting slow and broken code, to fix what’s broken faster. Installing the Python SDK is super easy and PyCoder’s Weekly subscribers get three full months of the team plan. Just use code “pycoder” on signup →

SENTRY sponsor

Constraint Programming Using CP-SAT and Python

Constraint programming is the process of looking for solutions based on a series of restrictions, like employees over 18 who have worked the cash before. This article introduces the concept and shows you how to use open source libraries to write constraint solving code.

PHILIPPE OLIVIER

Register for PyOhio, July 27-28

PYOHIO.ORG • Shared by Anurag Saxena

Discussions

Articles & Tutorials

A Search Engine for Python Packages

Finding the right Python package on PyPI can be a bit difficult, since PyPI isn’t really designed for discovering packages easily. PyPiScout.com solves this problem by allowing you to search using descriptions like “A package that makes nice plots and visualizations.”

PYPISCOUT.COM • Shared by Florian Maas

Programming Advice I’d Give Myself 15 Years Ago

Marcus writes in depth about things he has learned in his coding career and wishes he knew earlier in his journey. Thoughts include fixing foot guns, understanding the pace-quality trade-off, sharpening your axe, and more. Associated HN Discussion.

MARCUS BUFFETT

Keeping Things in Sync: Derive vs Test

Don’t Repeat Yourself (DRY) is generally a good coding philosophy, but it shouldn’t be adhered to blindly. There are other alternatives, like using tests to make sure that duplication stays in sync. This article outlines the why and how of just that.

LUKE PLANT

8 Versions of UUID and When to Use Them

RFC 9562 outlines the structure of Universally Unique IDentifiers (UUIDs) and includes eight different versions. In this post, Nicole gives a quick intro to each kind so you don’t have to read the docs, and explains why you might choose each.
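For a quick taste in Python, the standard `uuid` module can generate some of these versions. This sketch is not from the linked post, and the standard library has historically covered only a subset of the versions in the RFC (for example versions 1, 3, 4 and 5), so check the documentation for your Python release.

```python
import uuid

random_id = uuid.uuid4()  # version 4: random
name_based = uuid.uuid5(uuid.NAMESPACE_DNS, "python.org")  # version 5: SHA-1 of namespace + name
same_again = uuid.uuid5(uuid.NAMESPACE_DNS, "python.org")

versions = (random_id.version, name_based.version)
```

Version 5 is deterministic, so the same namespace and name always give the same identifier, while version 4 produces a new random value on each call.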

NICOLE TIETZ-SOKOLSKAYA

Defining Python Constants for Code Maintainability

In this video course, you’ll learn how to properly define constants in Python. By coding a bunch of practical examples, you’ll also learn how Python constants can improve your code’s readability, reusability, and maintainability.

REAL PYTHON course

Django: Test for Pending Migrations

The makemigrations --check command tells you if there are missing migrations in your Django project, but you have to remember to run it. Adam suggests calling it from a test so it gets triggered as part of your CI/CD process.

ADAM JOHNSON

How to Maximize Your Experience at EuroPython 2024

Conferences can be overwhelming, with lots going on and lots of choices. This post talks about how to get the best experience at EuroPython, or any conference.

SANGARSHANAN

Polars vs. pandas: What’s the Difference?

Explore the key distinctions between Polars and pandas, two data manipulation tools. Discover which framework suits your data processing needs best.

JODIE BURCHELL

An Overview of the Sparse Array Ecosystem for Python

An overview of the different options available for working with sparse arrays in Python.

HAMEER ABBASI

Projects & Code

Get Space Weather Data

GITHUB.COM/BEN-N93 • Shared by Ben Nour

Events

Weekly Real Python Office Hours Q&A (Virtual)

July 10, 2024

REALPYTHON.COM

PyCon Nigeria 2024

July 10 to July 14, 2024

PYCON.ORG

PyData Eindhoven 2024

July 11 to July 12, 2024

PYDATA.ORG

Python Atlanta

July 11 to July 12, 2024

MEETUP.COM

PyDelhi User Group Meetup

July 13, 2024

MEETUP.COM

DFW Pythoneers 2nd Saturday Teaching Meeting

July 13, 2024

MEETUP.COM

Happy Pythoning!

This was PyCoder’s Weekly Issue #637.


[ Subscribe to 🐍 PyCoder’s Weekly 💌 – Get the best Python news, articles, and tutorials delivered to your inbox once a week >> Click here to learn more ]

Python Engineering at Microsoft

Python in Visual Studio Code – July 2024 Release

We’re excited to announce the July 2024 release of the Python and Jupyter extensions for Visual Studio Code!

This release includes the following announcements:

- Enhanced environment discovery with python-environment-tools

- Improved support for reStructuredText docstrings with Pylance

- Community contributed Pixi support

If you’re interested, you can check the full list of improvements in our changelogs for the Python, Jupyter and Pylance extensions.

Enhanced environment discovery with python-environment-tools

We are excited to introduce a new tool, python-environment-tools, designed to significantly enhance the speed of detecting global Python installations and Python virtual environments.

This tool leverages Rust to ensure a rapid and accurate discovery process. It also minimizes the number of Input/Output operations by collecting all necessary environment information at once, significantly enhancing the overall performance.

We are currently testing this new feature in the Python extension, running it in parallel with the existing support, to evaluate the new discovery performance. Consequently, you will see a new logging channel called Python Locator that shows the discovery times with this new tool.

This enhancement is part of our ongoing efforts to optimize the performance and efficiency of Python support in VS Code. Visit the python-environment-tools repo to learn more about this feature, ongoing work, and provide feedback!

Improved support for reStructuredText docstrings with Pylance

Pylance has improved support for rendering reStructuredText documentation strings (docstrings) on hover! reStructuredText (RST) is a popular format for documentation, and its syntax is sometimes used for the docstrings of Python packages.

This feature is in its early stages and is currently behind an experimental flag as we work to ensure it handles various Sphinx, Google Doc, and Epytext scenarios effectively. To try it out, you can enable the experimental setting python.analysis.supportRestructuredText.

Common packages where you might observe this change in their docstrings include pandas and scipy. Try this change out, and report any issues or feedback at the Pylance GitHub repository.
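For readers unfamiliar with the format, a docstring written with reStructuredText field lists looks roughly like the sketch below. The `scale` function is a made-up example, and how Pylance renders it on hover is not shown here.

```python
def scale(values, factor=2.0):
    """Multiply every element of *values* by *factor*.

    :param values: Numbers to scale.
    :type values: list[float]
    :param factor: Multiplier applied to each element.
    :type factor: float
    :returns: A new list with the scaled numbers.
    :rtype: list[float]
    """
    return [v * factor for v in values]


scaled = scale([1.0, 2.5], factor=2.0)
```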

Note: This setting is currently experimental, but will likely be enabled by default in the future as it becomes more stabilized.

Community contributed Pixi support

Thanks to @baszalmstra, there is now support for Pixi environment detection in the Python extension! This work added a locator to detect Pixi environments in your workspace similar to other common environments such as Conda. Furthermore, if a Pixi environment is detected in your workspace, the environment will automatically be selected as your default environment.

We appreciate and look forward to continued collaboration with community members on bug fixes and enhancements to improve the Python experience!

Other Changes and Enhancements

We have also added small enhancements and fixed issues requested by users that should improve your experience working with Python and Jupyter Notebooks in Visual Studio Code. Some notable changes include:

- Smart Send with Shift+Enter is now available in the VS Code Native REPL for Python (@vscode-python#23638)

- Support pytest parameterized tests spanning multiple classes when calling the same setup function (@vscode-python#23535)

- Bug fix to have load bar show during test discovery (@vscode-python#23537)

We would also like to extend special thanks to this month’s contributors:

- @covracer Restore execute bits on deactivate scripts in vscode-python#23620

- @nickwarters Activate Python extension when .venv or .conda is found in the workspace in vscode-python#23642

- @baszalmstra Add locator for Pixi environments in vscode-python#22968

- @DetachHead Add hook to vscode-pytest to determine number xdist workers to use based on count of selected tests in vscode-python#23539

Call for Community Feedback

As we are planning and prioritizing future work, we value your feedback! Below are a few issues we would love feedback on:

- Design proposal for test coverage (@vscode-python#22827)

Try out these new improvements by downloading the Python extension and the Jupyter extension from the Marketplace, or install them directly from the extensions view in Visual Studio Code (Ctrl + Shift + X or ⌘ + ⇧ + X). You can learn more about Python support in Visual Studio Code in the documentation. If you run into any problems or have suggestions, please file an issue on the Python VS Code GitHub page.


Real Python

Customize VS Code Settings

Visual Studio Code is an open-source code editor available on all platforms. It’s also a great platform for Python development. The default settings in VS Code present a somewhat cluttered environment.

This Code Conversation with instructor Philipp Acsany is about learning how to customize the settings within the interface of VS Code. Having a clean digital workspace is an important part of your work life. Removing distractions and making code more readable can increase productivity and even help you spot bugs.

In this Code Conversation, you’ll learn how to:

- Work With User Settings

- Create a VS Code Profile

- Find and Adjust Specific Settings

- Clean Up the VS Code User Interface

- Export Your Profile to Re-use Across Installations


Django Weblog

Django security releases issued: 5.0.7 and 4.2.14

In accordance with our security release policy, the Django team is issuing releases for Django 5.0.7 and Django 4.2.14. These releases address the security issues detailed below. We encourage all users of Django to upgrade as soon as possible.

CVE-2024-38875: Potential denial-of-service in django.utils.html.urlize()

urlize() and urlizetrunc() were subject to a potential denial-of-service attack via certain inputs with a very large number of brackets.

Thanks to Elias Myllymäki for the report.

This issue has severity "moderate" according to the Django security policy.

CVE-2024-39329: Username enumeration through timing difference for users with unusable passwords

The django.contrib.auth.backends.ModelBackend.authenticate() method allowed remote attackers to enumerate users via a timing attack involving login requests for users with unusable passwords.

This issue has severity "low" according to the Django security policy.

CVE-2024-39330: Potential directory-traversal in django.core.files.storage.Storage.save()

Derived classes of the django.core.files.storage.Storage base class which override generate_filename() without replicating the file path validations existing in the parent class, allowed for potential directory-traversal via certain inputs when calling save().

Built-in Storage sub-classes were not affected by this vulnerability.

Thanks to Josh Schneier for the report.

This issue has severity "low" according to the Django security policy.

CVE-2024-39614: Potential denial-of-service in django.utils.translation.get_supported_language_variant()

get_supported_language_variant() was subject to a potential denial-of-service attack when used with very long strings containing specific characters.

To mitigate this vulnerability, the language code provided to get_supported_language_variant() is now parsed up to a maximum length of 500 characters.

Thanks to MProgrammer for the report.

This issue has severity "moderate" according to the Django security policy.

Affected supported versions

- Django main branch

- Django 5.1 (currently at beta status)

- Django 5.0

- Django 4.2

Resolution

Patches to resolve the issue have been applied to Django's main, 5.1, 5.0, and 4.2 branches. The patches may be obtained from the following changesets.

CVE-2024-38875: Potential denial-of-service in django.utils.html.urlize()

- On the main branch

- On the 5.1 branch

- On the 5.0 branch

- On the 4.2 branch

CVE-2024-39329: Username enumeration through timing difference for users with unusable passwords

- On the main branch

- On the 5.1 branch

- On the 5.0 branch

- On the 4.2 branch

CVE-2024-39330: Potential directory-traversal in django.core.files.storage.Storage.save()

- On the main branch

- On the 5.1 branch

- On the 5.0 branch

- On the 4.2 branch

CVE-2024-39614: Potential denial-of-service in django.utils.translation.get_supported_language_variant()

- On the main branch

- On the 5.1 branch

- On the 5.0 branch

- On the 4.2 branch

The following releases have been issued

- Django 5.0.7 (download Django 5.0.7 | 5.0.7 checksums)

- Django 4.2.14 (download Django 4.2.14 | 4.2.14 checksums)

The PGP key ID used for this release is Natalia Bidart: 2EE82A8D9470983E

General notes regarding security reporting

As always, we ask that potential security issues be reported via private email to security@djangoproject.com, and not via Django's Trac instance, nor via the Django Forum, nor via the django-developers list. Please see our security policies for further information.

Real Python

Quiz: Split Your Dataset With scikit-learn's train_test_split()

In this quiz, you’ll test your understanding of how to use train_test_split() from the sklearn library.

By working through this quiz, you’ll revisit why you need to split your dataset in supervised machine learning, which subsets of the dataset you need for an unbiased evaluation of your model, how to use train_test_split() to split your data, and how to combine train_test_split() with prediction methods.


Python Bytes

#391 A weak episode

Topics covered in this episode:

- Vendorize packages from PyPI (https://github.com/mwilliamson/python-vendorize)
- A Guide to Python's Weak References Using weakref Module (https://martinheinz.dev/blog/112)
- Making Time Speak (https://github.com/Proteusiq/saa)
- How Should You Test Your Machine Learning Project? A Beginner’s Guide (https://towardsdatascience.com/how-should-you-test-your-machine-learning-project-a-beginners-guide-2e22da5a9bfc)
- Extras
- Joke

Watch on YouTube: https://www.youtube.com/watch?v=c8RSsydIhhs

About the show

Sponsored by Code Comments, an original podcast from RedHat: https://pythonbytes.fm/code-comments

Connect with the hosts

- Michael: @mkennedy@fosstodon.org (https://fosstodon.org/@mkennedy)
- Brian: @brianokken@fosstodon.org (https://fosstodon.org/@brianokken)
- Show: @pythonbytes@fosstodon.org (https://fosstodon.org/@pythonbytes)

Join us on YouTube at pythonbytes.fm/live (https://pythonbytes.fm/stream/live) to be part of the audience. Usually Tuesdays at 10am PT. Older video versions available there too.

Finally, if you want an artisanal, hand-crafted digest of every week of the show notes in email form? Add your name and email to our friends of the show list (https://pythonbytes.fm/friends-of-the-show), we'll never share it.

Michael #1: Vendorize packages from PyPI

- Allows pure-Python dependencies to be vendorized: that is, the Python source of the dependency is copied into your own package.
- Best used for small, pure-Python dependencies

Brian #2: A Guide to Python's Weak References Using weakref Module

- Martin Heinz
- Very cool discussion of weakref
- Quick garbage collection intro, and how references and weak references are used.
- Using weak references to build data structures.
  - Example of two kinds of trees
- Implementing the Observer pattern
- How logging and OrderedDict use weak references

Michael #3: Making Time Speak

- by Prayson, a former guest and friend of the show
- Translating time into human-friendly spoken expressions
- Example: clock("11:15") # 'quarter past eleven'
- Features
  - Convert time into spoken expressions in various languages.
  - Easy-to-use API with a simple and intuitive design.
  - Pure Python implementation with no external dependencies.
  - Extensible architecture for adding support for additional languages using the plugin design pattern.

Brian #4: How Should You Test Your Machine Learning Project? A Beginner’s Guide

- François Porcher
- Using pytest and pytest-cov for testing machine learning projects
- Lots of pieces can and should be tested just as normal functions.
  - Example of testing a clean_text(text: str) -> str function
- Test larger chunks with canned input and expected output.
  - Example test_tokenize_text()
- Using fixtures for larger reusable components in testing
  - Example fixture: bert_tokenizer() with pretrained data
- Checking coverage

Extras

Michael:

- Twilio Authy Hack (https://www.macrumors.com/2024/07/05/authy-app-hack-exposes-phone-numbers/)
  - Google Authenticator is the only option? Really? (https://python-bytes-static.nyc3.digitaloceanspaces.com/google-really.png)
  - Bitwarden to the rescue (https://bitwarden.com)
  - Requires (?) an update to their app (https://apps.apple.com/us/app/twilio-authy/id494168017), whose release notes (v26.1.0) only say “Bug fixes”
- Introducing Docs in Proton Drive (https://9to5mac.com/2024/07/03/proton-drive-gets-collaborative-docs-end-to-end-encryption/)
  - This is what I called on Mozilla to do in “Unsolicited Advice for Mozilla and Firefox” (https://mkennedy.codes/posts/michael-kennedys-unsolicited-advice-for-mozilla-and-firefox/). But Proton got there first
- Early bird ending for Code in a Castle course (https://www.codeinacastle.com/python-zero-to-hero-2024?utm_source=pythonbytes)

Joke: I Lied (https://devhumor.com/media/in-rust-i-trust)

July 08, 2024

Real Python

Python News Roundup: July 2024

Summer isn’t all holidays and lazy days at the beach. Over the last month, two important players in the data science ecosystem released new major versions. NumPy published version 2.0, which comes with several improvements but also some breaking changes. At the same time, Polars reached its version 1.0 milestone and is now considered production-ready.

PyCon US was hosted in Pittsburgh, Pennsylvania in May. The conference is an important meeting spot for the community and sparked some new ideas and discussions. You can read about some of these in PSF’s coverage of the Python Language Summit, and watch some of the videos posted from the conference.

Dive in to learn more about the most important Python news from the last month.

NumPy Version 2.0

NumPy is a foundational package in the data science space. The library provides in-memory N-dimensional arrays and many functions for fast operations on those arrays.

Many libraries in the ecosystem use NumPy under the hood, including pandas, SciPy, and scikit-learn. The NumPy package has been around for close to twenty years and has played an important role in the rising popularity of Python among data scientists.

The new version 2.0 of NumPy is an important milestone, which adds an improved string type, cleans up the library, and improves performance. However, it comes with some changes that may affect your code.

The biggest breaking changes happen in the C-API of NumPy. Typically, this won’t affect you directly, but it can affect other libraries that you rely on. The community has rallied strongly and most of the bigger packages already support NumPy 2.0. You can check NumPy’s table of ecosystem support for details.

One of the main reasons for using NumPy is that the library can do fast and convenient array operations. For a simple example, the following code calculates square numbers:

>>> numbers = range(10)
>>> [number**2 for number in numbers]
[0, 1, 4, 9, 16, 25, 36, 49, 64, 81]

>>> import numpy as np
>>> numbers = np.arange(10)
>>> numbers**2
array([ 0,  1,  4,  9, 16, 25, 36, 49, 64, 81])

First, you use range() and a list comprehension to calculate the first ten square numbers in pure Python. Then, you repeat the calculation with NumPy. Note that you don’t need to explicitly spell out the loop. NumPy handles that for you under the hood.

Furthermore, the NumPy version will be considerably faster, especially for bigger arrays of numbers. One of the secrets to this speed is that NumPy arrays are limited to having one data type, while a Python list can be heterogeneous. One list can contain elements as different as integers, floats, strings, and even nested lists. That’s not possible in a NumPy array.

Improved String Handling

By enforcing all elements to be of the same type that take up the same number of bytes in memory, NumPy can quickly find and work with individual elements. One downside to this has been that strings can be awkward to work with:

>>> words = np.array(["numpy", "python"])
>>> words
array(['numpy', 'python'], dtype='<U6')

>>> words[1] = "monty python"
>>> words
array(['numpy', 'monty '], dtype='<U6')

You first create an array consisting of two strings. Note that NumPy automatically detects that the longest string is six characters long, so it sets aside space for each string to be six characters long. The 6 in the data type string, <U6, indicates this.

Next, you try to replace the second string with a longer string. Unfortunately, only the first six characters are stored since that’s how much space NumPy has set aside for each string in this array. There are ways to work around these limitations, but in NumPy 2.0, you can take advantage of variable length strings instead:

Read the full article at https://realpython.com/python-news-july-2024/ »

[ Improve Your Python With 🐍 Python Tricks 💌 – Get a short & sweet Python Trick delivered to your inbox every couple of days. >> Click here to learn more and see examples ]

Anwesha Das

Euro Python 2024

It is July, and it is time for Euro Python, and 2024 is my first Euro Python. Some busy days are on the way. Like every other conference, I have my diary, and the conference days are full of various activities.

Day 0 of the main conference

After a long time, I will give a legal talk. We are going to dig into some basics of Intellectual Property. What is it? Why do we need it? What are the different kinds of intellectual property? It is a legal talk designed for developers. So, anyone and everyone from the community with previous knowledge can understand the content and use it to understand their fundamental rights and duties as developers. The talk, Intellectual Property 101, is scheduled at 11:35 hrs.

Day 1 of the main conference

Day 1 is PyLadies Day, a day dedicated to PyLadies. We have crafted the day with several different kinds of events. The day opens with a self-defense workshop at 10:30 hrs. PyLadies, throughout the world, aims to provide and foster a safe space for women and friends in the Python Community. This workshop is an extension of that goal. We will learn how to deal with challenging, inappropriate behavior in the community, at work, or in any social space. We will have a trained psychologist as a session guide to help us. This workshop is as important today as it was yesterday and may be in the future (at least until the enforcement of CoC is clear). I am so looking forward to the workshop. Thank you, Mia, Lias and all the PyLadies for organizing this and giving shape to my long-cherished dream.

Then we have my favorite part of the conference, PyLadies Lunch. I crafted the afternoon with a little introduction session, shout-out session, food, fun, laughter, and friends.

After the PyLadies Lunch, I have my only non-PyLadies session, which is a panel discussion on Open Source Sustainability. We will discuss the different aspects of sustainability in the open source space and community.

Again, it is PyLady's time. Here, we have two sessions.

The first is [IAmRemarkable](https://ep2024.europython.eu/pyladies-events#iamremarkable), a workshop that helps you empower yourself by celebrating your achievements and fighting your impostor syndrome. It will also help you improve your self-promotion skills.

The second session is a 1:1 mentoring event, Meet & Greet with PyLadies. Here, the willing PyLadies will be able to mentor and be mentored. They can be coached in different subjects, starting with programming, learning, things related to job and/or career, etc.

Birds of a Feather session on Release Management of Open Source projects

It is an open discussion about release management in the Open Source ecosystem.

The discussion covers everything from community-led projects to projects maintained or initiated by a big enterprise, and from a project maintained by one contributor to a project with several hundred contributors. What are the different methods we follow regarding versioning, release cadence, and the process itself? Do most of us follow manual processes or depend on automated ones? What works and what does not, and how can we improve our lives? What are the significant points that make the difference? We will discuss and cover the following topics: release management of open source projects, security, automation, CI usage, and documentation. In the discussion, I will share my release automation journey with Ansible. We will follow the Chatham House Rule during the discussion to provide the space for open, frank, and collaborative conversation.

So, here come the days of code, collaboration, and community. See you all there.

PS: I miss my little Py-Lady volunteering at the booth.

Talk Python to Me

#469: PuePy: Reactive frontend framework in Python

Python is one of the most popular languages of the current era. It dominates data science, it's an incredible choice for web development, and it's many people's first language. But it's not super great on front-end programming, is it? Frameworks like React, Vue and other JavaScript frameworks rule the browser and few other languages even get a chance to play there. But with pyscript, which I've covered several times on this show, we have the possibility of Python on the front end. Yet it's not really a front end framework, just a runtime in the browser. That's why I'm excited to have Ken Kinder on the podcast to talk about his project PuePy, a reactive frontend framework in Python.<br/> <br/> <strong>Episode sponsors</strong><br/> <br/> <a href='https://talkpython.fm/sentry'>Sentry Error Monitoring, Code TALKPYTHON</a><br> <a href='https://talkpython.fm/code-comments'>Code Comments</a><br> <a href='https://talkpython.fm/training'>Talk Python Courses</a><br/> <br/> <strong>Links from the show</strong><br/> <br/> <div><b>Michael's Code in a Castle Course</b>: <a href="https://talkpython.fm/castle" target="_blank" rel="noopener">talkpython.fm/castle</a><br/> <br/> <b>Ken Kinder</b>: <a href="https://twit.social/@bouncing" target="_blank" rel="noopener">@bouncing@twit.social</a><br/> <b>PuePy</b>: <a href="https://puepy.dev/" target="_blank" rel="noopener">puepy.dev</a><br/> <b>PuePy Docs</b>: <a href="https://docs.puepy.dev/" target="_blank" rel="noopener">docs.puepy.dev</a><br/> <b>PuePy on Github</b>: <a href="https://github.com/kkinder/puepy" target="_blank" rel="noopener">github.com</a><br/> <b>pyscript</b>: <a href="https://pyscript.net" target="_blank" rel="noopener">pyscript.net</a><br/> <b>VueJS</b>: <a href="https://vuejs.org" target="_blank" rel="noopener">vuejs.org</a><br/> <b>Hello World example</b>: <a href="https://docs.puepy.dev/hello-world.html" target="_blank" rel="noopener">docs.puepy.dev</a><br/> <b>Tutorial</b>: <a 
href="https://docs.puepy.dev/tutorial.html" target="_blank" rel="noopener">docs.puepy.dev</a><br/> <b>Tutorial running at pyscript.com</b>: <a href="https://pyscript.com/@kkinder/puepy-tutorial/latest" target="_blank" rel="noopener">pyscript.com</a><br/> <b>Micropython</b>: <a href="https://micropython.org" target="_blank" rel="noopener">micropython.org</a><br/> <b>Pyodide</b>: <a href="https://pyodide.org/en/stable/" target="_blank" rel="noopener">pyodide.org</a><br/> <b>PgQueuer</b>: <a href="https://github.com/janbjorge/PgQueuer" target="_blank" rel="noopener">github.com</a><br/> <b>Writerside</b>: <a href="https://www.jetbrains.com/writerside/" target="_blank" rel="noopener">jetbrains.com</a><br/> <br/> <b>Michael's PWA pyscript app</b>: <a href="https://github.com/mikeckennedy/pyscript-pwa-example" target="_blank" rel="noopener">github.com</a><br/> <b>Michael's demo of a PWA pyscript app</b>: <a href="https://www.youtube.com/watch?v=lC2jUeDKv-s" target="_blank" rel="noopener">youtube.com</a><br/> <b>Python iOS Web App with pyscript and offline PWAs video</b>: <a href="https://www.youtube.com/watch?v=Nct0usblj64" target="_blank" rel="noopener">youtube.com</a><br/> <b>Watch this episode on YouTube</b>: <a href="https://www.youtube.com/watch?v=-mbVh24qQmA" target="_blank" rel="noopener">youtube.com</a><br/> <b>Episode transcripts</b>: <a href="https://talkpython.fm/episodes/transcript/469/puepy-reactive-frontend-framework-in-python" target="_blank" rel="noopener">talkpython.fm</a><br/> <br/> <b>--- Stay in touch with us ---</b><br/> <b>Subscribe to us on YouTube</b>: <a href="https://talkpython.fm/youtube" target="_blank" rel="noopener">youtube.com</a><br/> <b>Follow Talk Python on Mastodon</b>: <a href="https://fosstodon.org/web/@talkpython" target="_blank" rel="noopener"><i class="fa-brands fa-mastodon"></i>talkpython</a><br/> <b>Follow Michael on Mastodon</b>: <a href="https://fosstodon.org/web/@mkennedy" target="_blank" rel="noopener"><i class="fa-brands 
fa-mastodon"></i>mkennedy</a><br/></div>

Zato Blog

Integrating with WordPress and Elementor API webhooks


Overview

Consider this scenario:

- You have a WordPress instance, possibly installed in your own internal network

- With WordPress, you use Elementor, a popular website builder

- A user fills out a form that you prepared using Elementor, e.g. the user provides his or her email and username to create an account in your CRM

- Now, after WordPress processes this information accordingly, you also need to send it all to a remote backend system that only accepts JSON messages

The concern here is that WordPress alone will not send it to the backend system.

Hence, we are going to use an Elementor-based webhook that will invoke Zato which will be acting as an integration layer. In Zato, we will use Python to transform the results of what was submitted in the form - in order to deliver it to the backend API system using a REST call.

Creating a channel

A Zato channel is a way to describe the configuration of a particular API endpoint. In this case, to accept data from WordPress, we are going to use REST channels:

In the screenshot below, note particularly the highlighted data format field. Typically, REST channels will use JSON, but here, we need to use "Form data" because this is what we are getting from Elementor.

Now, we can add the actual code to accept the data and to communicate with the remote, backend system.

Python code

Here is the Python code and what follows is an explanation of how it works:

# -*- coding: utf-8 -*-

# Zato
from zato.server.service import Service

# Field configuration
field_email = 'fields[email][value]'
field_username = 'fields[username][value]'

class CreateAccount(Service):

    # The input that we expect from WordPress, i.e. what fields it needs to send
    input = field_email, field_username

    def handle(self):

        # This is a dictionary object with data from WordPress ..
        input = self.request.input

        # .. so we can use dictionary access to extract values received ..
        email = input[field_email]
        username = input[field_username]

        # .. now, we can create a JSON request for the backend system ..
        # .. again, using a regular Python dictionary ..
        api_request = {
            'Email': email,
            'Username': username
        }

        # Obtain a connection to the backend system ..
        conn = self.out.rest['CRM'].conn

        # .. invoke that system ..
        # Invoke the resource providing all the information on input
        response = conn.post(self.cid, api_request)

        # .. and log the response received ..
        self.logger.info('Backend response -> %s', response.data)

The format of data that Elementor will use is of a specific nature. It is not JSON and the field names are not sent directly either.

That is, if your form has fields such as "email" and "username", what the webhook sends is named differently. The names will be, respectively:

- fields[email][value]

- fields[username][value]

If it were JSON, we could say that instead of this ..

.. the webhook was sending to you that:
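As a rough illustration, with made-up sample values, the two shapes can be written side by side as Python dictionaries. The first is what you might expect from a form with "email" and "username" fields, the second uses the field names listed above:

```python
# Hypothetical sample values, for illustration only.
expected_shape = {
    "email": "jane@example.com",
    "username": "jane",
}

# The same data keyed by the names that the webhook uses.
elementor_shape = {
    "fields[email][value]": "jane@example.com",
    "fields[username][value]": "jane",
}

# Keys containing brackets are not valid attribute names,
# so the values have to be read with dictionary access.
print(elementor_shape["fields[username][value]"])  # jane
```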

The above format explains why in the Python code above we are extracting all the input fields from WordPress through the "self.request.input" object using its dictionary access syntax.

Normally, if the field was plain "username", we would be doing "self.request.input.username" but this is not available in this case because of the naming conventions of the fields from Elementor.

Now, the only remaining part is the definition of the outgoing REST connection that the service should use.

Outgoing REST connections

Create an outgoing REST connection as below - this time around, note that the data format is JSON.

Using the REST channel

In your WordPress dashboard, create a webhook using Elementor and point it to the channel created earlier, e.g. make Elementor invoke an address such as http://10.157.11.39:11223/api/wordpress/create-account

Each time a form is submitted, its contents will go to Zato, your service will transform it to JSON and the backend CRM system will be invoked.
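To make the transformation step concrete outside of Zato, here is a minimal standard-library sketch. It assumes the webhook body arrives as URL-encoded form data; the sample body and the form_to_json helper are hypothetical and are not part of Zato or Elementor:

```python
import json
from urllib.parse import parse_qs

FIELD_EMAIL = 'fields[email][value]'
FIELD_USERNAME = 'fields[username][value]'

def form_to_json(body: str) -> str:
    """Turn a URL-encoded form body into the JSON document for the backend."""
    # parse_qs decodes the percent-encoded brackets and returns lists of values.
    form = parse_qs(body)
    api_request = {
        'Email': form[FIELD_EMAIL][0],
        'Username': form[FIELD_USERNAME][0],
    }
    return json.dumps(api_request)

# Hypothetical form submission, percent-encoded as it would travel over HTTP.
body = ('fields%5Bemail%5D%5Bvalue%5D=jane%40example.com'
        '&fields%5Busername%5D%5Bvalue%5D=jane')
print(form_to_json(body))  # {"Email": "jane@example.com", "Username": "jane"}
```

In the actual service, Zato does the form parsing for you and the outgoing connection takes care of serializing the dictionary, so only the field mapping remains.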

And this is everything - you have just integrated WordPress, Elementor webhooks and an external API backend system in Python.

More resources

➤ Python API integration tutorial

➤ What is an integration platform?

➤ Python Integration platform as a Service (iPaaS)

➤ What is an Enterprise Service Bus (ESB)? What is SOA?

Wingware

Wing Python IDE Version 10.0.5 - July 8, 2024

Wing 10.0.5 adds support for running the IDE on arm64 Linux, updates the German language UI localization, changes the default OpenAI model to lower cost and better performing gpt-4o, and fixes several bugs.

See the change log for details.

Download Wing 10 Now: Wing Pro | Wing Personal | Wing 101 | Compare Products

What's New in Wing 10


AI Assisted Development

Wing Pro 10 takes advantage of recent advances in the capabilities of generative AI to provide powerful AI assisted development, including AI code suggestion, AI driven code refactoring, description-driven development, and AI chat. You can ask Wing to use AI to (1) implement missing code at the current input position, (2) refactor, enhance, or extend existing code by describing the changes that you want to make, (3) write new code from a description of its functionality and design, or (4) chat in order to work through understanding and making changes to code.

Examples of requests you can make include:

"Add a docstring to this method" "Create unit tests for class SearchEngine" "Add a phone number field to the Person class" "Clean up this code" "Convert this into a Python generator" "Create an RPC server that exposes all the public methods in class BuildingManager" "Change this method to wait asynchronously for data and return the result with a callback" "Rewrite this threaded code to instead run asynchronously"

Yes, really!

Your role changes to one of directing an intelligent assistant capable of completing a wide range of programming tasks in relatively short periods of time. Instead of typing out code by hand every step of the way, you are essentially directing someone else to work through the details of manageable steps in the software development process.

Support for Python 3.12 and ARM64 Linux

Wing 10 adds support for Python 3.12, including (1) faster debugging with PEP 669 low impact monitoring API, (2) PEP 695 parameterized classes, functions and methods, (3) PEP 695 type statements, and (4) PEP 701 style f-strings.

Wing 10 also adds support for running Wing on ARM64 Linux systems.

Poetry Package Management

Wing Pro 10 adds support for Poetry package management in the New Project dialog and the Packages tool in the Tools menu. Poetry is an easy-to-use cross-platform dependency and package manager for Python, similar to pipenv.

Ruff Code Warnings & Reformatting

Wing Pro 10 adds support for Ruff as an external code checker in the Code Warnings tool, accessed from the Tools menu. Ruff can also be used as a code reformatter in the Source > Reformatting menu group. Ruff is an incredibly fast Python code checker that can replace or supplement flake8, pylint, pep8, and mypy.

Try Wing 10 Now!


Wing 10 is a ground-breaking new release in Wingware's Python IDE product line. Find out how Wing 10 can turbocharge your Python development by trying it today.

Downloads: Wing Pro | Wing Personal | Wing 101 | Compare Products

See Upgrading for details on upgrading from Wing 9 and earlier, and Migrating from Older Versions for a list of compatibility notes.

July 07, 2024

Robin Wilson

Who reads my blog? Send me an email or comment if you do!

I’m interested to find out who is reading my blog. Following the lead of Jamie Tanna who was in turn copying Terence Eden (both of whose blogs I read), I’d like to ask people who read this to drop me an email or leave a comment on this post if you read this blog and see this post. I have basic analytics on this blog, and I seem to get a reasonable number of views – but I don’t know how many of those are real people, and how many are bots etc.

Feel free to just say hi, but if you have chance then I’d love to find out a bit more about you and how you read this. Specifically, feel free to answer any or all of the following questions:

- Do you read this on the website or via RSS?

- Do you check regularly/occasionally for new posts, or do you follow me on social media (if so, which one?) to see new posts?

- How did you find my blog in the first place?

- Are you interested in and/or working in one of my specialisms – like geospatial data, general data science/data processing, Python or similar?

- Which posts do you find most interesting? Programming posts on how to do things? Geographic analyses? Book reviews? Rare unusual posts (disability, recipes etc)?

- Have you met me in real life?

- Is there anything particular you’d like me to write about?

The comments box should be just below here, and my email is robin@rtwilson.com

Thanks!

July 06, 2024

Carl Trachte

Graphviz - Editing a DAG Hamilton Graph dot File

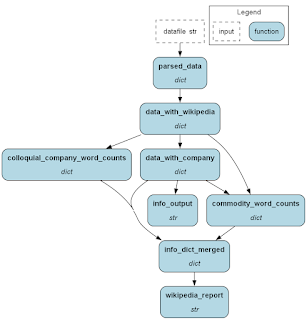

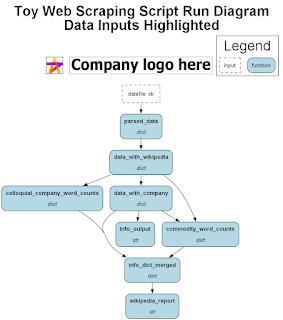

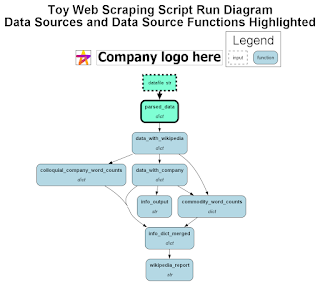

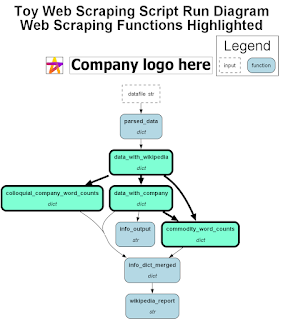

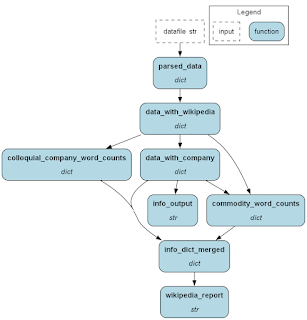

Last post featured the DAG Hamilton generated graphviz graph shown below. I'll be dressing this up a little and highlighting some functionality. For the toy example here, the script employed is a bit of overkill. For a bigger workflow, it may come in handy.

1) A Hamilton logo and a would-be company logo get added (manual; the Data Inputs Highlighted subtitle is there for later processing when we highlight functionality).

2) through 4) are done programmatically (code is shown further down). I saw an example on the Hamilton web pages that used aquamarine as the highlight color; I liked that, so I stuck with it.

2) Data source and data source function highlighted.

In the web scraping highlighted diagram, you can pretty clearly see that the data_with_company node has an input into the commodity_word_counts node. The domain specific rationale from the last blog post is that I don't want to count every "Barrick Gold" company name occurrence as another mention of "Gold" or "gold."

Toy example notwithstanding, in real life, being able to show where something branches critically is a real help. Assumptions about what a script is doing versus what it is actually doing can be costly in terms of time and productivity for all parties. It helps to be able to say and show ideas like, "What it's doing over here doesn't carry over to that other mission critical part you're really concerned with; it's only for purposes of the visualization which lies over here on the diagram" or "This node up here representing <the real life thing> is your sole source of input for this script; it is not looking at <other real world thing> at all."

Enough rationalization and qualifying - on to the config and the code!

I added the title and logos manually. The assumption that the graphviz dot file output of DAG Hamilton will always be in the format shown would be premature and probably wrong. It's an implementation detail subject to change and not a feature. That said, I needed some features in my graph outputs and I achieved them this one time.

Towards the top of the dot file is where the title goes:

labelloc="t" puts the text at the top of the graph (t for top, I think).

The DAG Hamilton logo listed first appears to end up in the upper left part of the diagram most of the time (this is an empirical observation on my part; I don't have a super great handle on the internals of graphviz yet).

Getting the company logo next to it requires a bit more effort. A StackOverflow exchange had a suggestion of connecting it invisibly to an initial node. In this case, that would be the data source. Inputs in DAG Hamilton don't get listed in the graphviz dot file by their names, but rather by the node or nodes they are connected to: _parsed_data_inputs instead of "datafile" like you might expect. I have a preference for listing my input nodes only once (deduplicate_inputs=True is the keyword argument to DAG Hamilton's driver object's display_all_functions method that makes the graph).

The change is about one third of the way down the dot file where the node connection edges start getting listed:

DAG Hamilton has a dashed box for script inputs. That's why there is all that extra description inside the square brackets for that node. I manually added the fillcolor="#ffffff" at the end. It's not necessary for the chart (I believe the default fill of white /#ffffff was specified near the top of the file), but it is necessary for the code I wrote to replace the existing color with something else. Otherwise, it does not affect the output.

I think that's it for manual prep.

On to the code. Both DAG Hamilton and graphviz have APIs for customizing the graphviz dot file output. I've opted to approach this with brute force text processing. For my needs, this is the best option. YMMV. In general, text processing any code or configuration tends to be brittle. It worked this time.
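As a rough sketch of what that kind of brute force text processing can look like (this is not the script from the post; the highlight_nodes helper and the sample dot text are made up for illustration), the idea is to find the lines that define the nodes of interest and swap the white fill for the highlight color:

```python
def highlight_nodes(dot_text, node_names, color="aquamarine"):
    """Replace the white fill on the definition lines of the given nodes."""
    out = []
    for line in dot_text.splitlines():
        stripped = line.strip()
        # A node definition starts with the node name followed by "[";
        # edge lines ("a -> b") do not match this test.
        if any(stripped.startswith(name + " [") for name in node_names):
            line = line.replace('fillcolor="#ffffff"', f'fillcolor="{color}"')
        out.append(line)
    return "\n".join(out)

# Hypothetical, heavily shortened dot file content.
sample = '''digraph {
    _parsed_data_inputs [label="datafile" style="filled,dashed" fillcolor="#ffffff"]
    parsed_data [label="parsed_data" fillcolor="#ffffff"]
    _parsed_data_inputs -> parsed_data
}'''

print(highlight_nodes(sample, ["_parsed_data_inputs"]))
```

This only works if every node line already carries an explicit fillcolor="#ffffff", which is why the manual edit described above matters.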

Thanks for stopping by.

July 05, 2024

TestDriven.io

Developing GraphQL APIs in Django with Strawberry

This tutorial details how to integrate GraphQL with Django using Strawberry.

Real Python

The Real Python Podcast – Episode #211: Python Doesn't Round Numbers the Way You Might Think

Does Python round numbers the same way you learned back in math class? You might be surprised by the default method Python uses and the variety of ways to round numbers in Python. Christopher Trudeau is back on the show this week, bringing another batch of PyCoder's Weekly articles and projects.
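For a quick taste of the surprise: the built-in round() sends ties to the nearest even integer, while the decimal module lets you ask for the round-half-up behavior many people learned in school. A small sketch:

```python
from decimal import Decimal, ROUND_HALF_UP

# Built-in round() resolves ties by going to the nearest even integer.
print(round(0.5), round(1.5), round(2.5))  # 0 2 2

# The decimal module can round ties away from zero instead.
half_up = Decimal("2.5").quantize(Decimal("1"), rounding=ROUND_HALF_UP)
print(half_up)  # 3
```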


The Python Show

Dashboards in Python with Streamlit

This week, I chatted with Channin Nantasenamat about Python and the Streamlit web framework.

Specifically, we chatted about the following topics:

Python packages

Streamlit

Teaching bioinformatics

Differences in data science disciplines

Being a YouTuber

and much more!

Links

Data Professor YouTube Channel

Follow Channin on X / Twitter

July 04, 2024

Carl Trachte

DAG Hamilton Workflow for Toy Text Processing Script

Hello. It's been a minute.

I was fortunate to attend PyCon US in Pittsburgh earlier this year. DAGWorks had a booth on the expo floor where I discovered Hamilton. The project grabbed my attention as something that could help organize and present my code workflow better. My reaction could be compared to browsing Walmart while picking up a hardware item and seeing the perfect storage medium for your clothes or crafts at a bargain price, but even better, having someone there to explain the whole thing to you. The folks at the booth were really helpful.

Pictured below is the Hamilton flow in the graphviz output format the project uses for flowcharts (graphviz has been around for decades - an oldie but goodie as it were).

I start with a csv file that has some really basic data on three big American metal mines (I did have to research the Wikipedia addresses - for instance, I originally looked for the Goldstrike Mine under the name "Post-Betze." It goes by several different names and encompasses several mines - more on that anon):

Basically, I am going to attempt to scrape Wikipedia for information on who owns the three mines. Then I will try to use heuristics to gather information on what I think I know about them and gauge how up to date the Wikipedia information is.

Hamilton uses a system whereby you name your functions in a noun-like fashion ("def stuff()" instead of "def getstuff()") and feed those names as variables to the other functions in the workflow as parameters. This is what allows the tool to check your workflow for inconsistencies (types, for instance) and build the graphviz chart shown above.
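The naming idea can be mimicked in a few lines of plain Python. The sketch below does not use Hamilton at all; the resolve helper and the three toy functions are made up to show how parameter names that match function names are enough to wire a workflow together:

```python
import inspect

# Noun-like function names; each parameter names the function that feeds it.
def raw_rows() -> list:
    return [{"mine": "Red Dog", "owner": "Teck"}]

def owner_names(raw_rows: list) -> list:
    return [row["owner"] for row in raw_rows]

def owner_count(owner_names: list) -> int:
    return len(owner_names)

def resolve(name, functions, cache=None):
    """Compute one node by first computing the nodes named in its signature."""
    cache = {} if cache is None else cache
    if name not in cache:
        func = functions[name]
        params = inspect.signature(func).parameters
        kwargs = {p: resolve(p, functions, cache) for p in params}
        cache[name] = func(**kwargs)
    return cache[name]

functions = {f.__name__: f for f in (raw_rows, owner_names, owner_count)}
print(resolve("owner_count", functions))  # 1
```

Hamilton itself does considerably more with the same information, such as checking types and drawing the graph.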

You can use separate modules with functions and import them. I've done some of this on the bigger workflows I work with. Your Hamilton functions then end up being little one liners that call the bigger functions in the modules. This is necessary if you have functions you use repeatedly in your workflow that take different values at different stages. For this toy project, I've kept the whole thing self contained in one module toyscriptiii.py (yes, the iii in the filename represents my multiple failed attempts at web scraping and text processing - it's harder than it looks).

Below is the Hamilton main file run.py (I believe the "run.py" name is convention.) I have done my best to preserve the dictionary return values as "faux immutable" through use of the copy module in each function. This helps me in debugging and examining output, much of which can be done from the run.py file (all the return values are stored in a dictionary). I've worked with a dataset with about 600,000 rows that had about 10 nodes. My computer has 32GB of RAM (Windows 11); it handled memory fine (less than half). For really big data, keeping all these dictionaries in memory might be a problem.
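A minimal sketch of the "faux immutable" idea, using made-up node functions rather than the ones from the script: each function deep-copies its input before changing anything, so the value returned by the upstream node stays as it was for later inspection.

```python
import copy

def raw_data() -> dict:
    # Hypothetical starting data.
    return {"Red Dog": ["Teck"]}

def data_with_note(raw_data: dict) -> dict:
    # Work on a deep copy so the upstream dictionary is left untouched.
    result = copy.deepcopy(raw_data)
    result["Red Dog"].append("checked")
    return result

source = raw_data()
derived = data_with_note(source)
print(source)   # {'Red Dog': ['Teck']}
print(derived)  # {'Red Dog': ['Teck', 'checked']}
```

The cost is the extra memory for each copy, which is the trade-off mentioned above for really big data.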

The main toy module with functions configured for the Hamilton graph:

My REGEX abilities are somewhere between "I've heard the term REGEX and know regular expressions exist" and bracketed characters in each slot brute force. It worked for this toy example. Each Wikipedia page features the word "Company" followed by the name of the owning corporate entity.
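For illustration only, here is one way such a pattern might look. The pattern, the owner_from_text helper, and the sample text are assumptions, not the regular expression the script uses:

```python
import re

# "Company", some whitespace, then a capitalized name running to the end of the line.
OWNER_PATTERN = re.compile(r"Company\s+([A-Z][A-Za-z&. ]*[A-Za-z])")

def owner_from_text(page_text):
    """Return the text that follows the word "Company", or None if absent."""
    match = OWNER_PATTERN.search(page_text)
    return match.group(1) if match else None

# Hypothetical text, loosely shaped like a scraped infobox.
sample = "Location Alaska\nCompany Teck Resources\nProducts Zinc"
print(owner_from_text(sample))  # Teck Resources
```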

Here are the two text outputs the script produces from the information provided (Wikipedia articles from July, 2024):

Company names are relatively straightforward, although mining company and properties acquisitions and mergers being what they are, it can get complicated. I unwittingly chose three properties that Wikipedia reports as having one owner. Other big mines like Morenci, Arizona (copper) and Cortez, Nevada (gold) show more than one owner; that case is for another programming day. The Goldstrike information might be out of date - no mention of Nevada Gold Mines or Newmont (one mention, but in a different context). The Cortez Wikipedia page is more current, although it still doesn't mention Nevada Gold Mines.

The inclusion of colloquial association in the input csv file was an afterthought based on a lot of the Wikipedia information not being completely in line with what I thought I knew. Teck is the operator of the Red Dog Mine in Alaska. That name does get mentioned frequently in the Wikipedia article.

Enough mining stuff - it is a programming blog after all. Next time (not written yet) I hope to cover dressing up and highlighting the graphviz output a bit.

Thank you for stopping by.

Eli Bendersky

You don't need virtualenv in Go

Programmers that come to Go from Python often wonder "do I need something like virtualenv here?"

The short answer is NO; this post will provide some additional details.

While virtualenv in Python is useful in many situations, I think it'd be fair to divide them into two broad scenarios: for execution and for development. Let's see what Go offers for each of these scenarios.

Execution

There are multiple, mutually-incompatible versions of Python out in the wild. There are even multiple versions of the packaging tools (like pip). On top of this, different programs need different packages, often themselves with mutually-incompatible versions.

Python code typically expects to be installed, and expects to find packages it depends on installed in a central location. This can be an issue for systems where we don't have the permission to install packages/code to a central location.

All of this makes distributing Python applications quite tricky. It's common to use bundling tools like PyInstaller, but virtualenv is also a popular option [1].

Go is a statically compiled language, so this is a non-problem! Binaries are easy to build and distribute; the binary is a native executable for a given platform (just like a native executable built from C or C++ source), and has no dependencies on compiler or package versions. While you can install Go programs into a central location, you by no means have to do this. In fact, you typically don't have to install Go programs at all. Just invoke the binary.

It's also worth mentioning that Go has great cross-compilation support, making it easy to create binaries for multiple OSes from a single development machine.

Development

Consider the following situation: you're developing a package, which depends on N other packages at specific versions; e.g. you need package foo at version 1.2 or above. Your system may have an older version of foo installed - 0.9; you try to upgrade it to 1.2 and some other program breaks. Now, this all sounds very manageable for package foo - how hard can it be to upgrade the uses of this simple package?

Reality is more difficult. foo could be Django; your code depends on a new version, while some other critical systems depend on an old version. Good luck fixing this conundrum. In Python, virtualenv is a critical tool to make such situations manageable; newer tools like pipenv wrap virtualenv with more usability patterns.

How about Go?

If you're using Go modules, this situation is very easy to handle. In a way, a Go module serves as its own virtualenv. Your go.mod file specifies the exact versions of dependency packages needed for your development, and these versions don't mix up with packages you need to develop some other project (which has its own go.mod).

Moreover, Go module directives like replace make it easy to short-circuit dependencies to try local patches. While debugging your project you find that package foo has a bug that may be affecting you? Want to try a quick fix and see if you're right? No problem, just clone foo locally, apply a fix, and use a replace to use this locally patched foo. See this post for a few ways to automate this process.

What about different Go versions? Suppose you have to investigate a user report complaining that your code doesn't work with an older Go version. Or maybe you're curious to see how the upcoming beta release of a Go version will affect you. Go makes it easy to install different versions locally. These different versions have their own standard libraries that won't interfere with each other.

[1] Fun fact: this blog uses the Pelican static site generator. To regenerate the site I run Pelican in a virtualenv because I need a specific version of Pelican with some personal patches.

Glyph Lefkowitz

Against Innovation Tokens

Updated 2024-07-04: After some discussion, added an epilogue going into more detail about the value of the distinction between the two types of tokens.

In 2015, Dan McKinley laid out a model for software teams selecting technologies. He proposed that each team have a limited supply of “innovation tokens”, and, when selecting a technology, they can choose boring ones for free but “innovative” ones cost a token. This implies that we all know which technologies are innovative, and we assume that they are inherently costly, so we want to restrict their supply.

That model has become popular to the point that it is now part of the vernacular. In many discussions, it is accepted as received wisdom, or even common sense.

In this post I aim to show you that despite being superficially helpful, this model is wrong, and in fact, may be counterproductive. I believe it is an attractive nuisance in computer programming discourse.

In fairness to Mr. McKinley, the model he described in this post is:

- nearly a decade old at this point, and

- much more nuanced in its description of the problem with “innovation” than the subsequent memetic mutation of the concept.

While I will be referencing McKinley’s post, and I do take some issue with it, I am reacting more strongly to the life of its own that this idea has taken on once it escaped its original context. There are a zillion worse posts rehashing this concept, on blogs and LinkedIn, but I won’t be linking to them because the goal is not to call anybody out.

To some extent I am re-raising McKinley’s own caveats and reinforcing them. So I may be arguing with a strawman, but it’s a strawman I have seen deployed with some regularity over the years.

To reduce it to its core, this strawman is “don’t use new or interesting technology, and if you have to, only use a little bit”.

Within the broader culture of programmers, an “innovation token” has become a shorthand to smear any technology perceived — almost always based on vibes, not data — as risky, and the adoption of novel approaches as pretentious and unserious. Speaking of programmer culture though, I do have to acknowledge there is also a pervasive tendency for us to get distracted by novelty and waste time on puzzles rather than problem-solving, so I understand where the reactionary attitude represented by the concept of an innovation token comes from.

But it is reactionary.

At its worst, it borders on anti-intellectualism. I have heard it used on more than one occasion as a thought-terminating cliche to discard a potentially promising new tool. But before I get into that, let me try to give a sympathetic summary of the idea, because the model is not entirely bad.

It has been popular for a long time because it does work okay as a heuristic.

The real problem that McKinley is describing is operational overhead. When programmers make a technology selection, we are often considering how difficult it will make the programming. Innovative technology selections are, by definition, less mature.

That lack of maturity — particularly in the open source world — often means that the project is in a part of its lifecycle where it is concerned with development affordances more than operational ones. Therefore, the stereotypical innovative project, even one which might legitimately be a big improvement to development velocity, will create more operational overhead. That operational overhead creates a hidden cost for the operations team later on.

This is a point I emphatically agree with. When selecting a technology, you should consider its ease of operation more than its ease of development. If your team is successful, they will be operating and maintaining it far longer than they are initially integrating and deploying it.

Furthermore, some operational overhead is inevitable. You will need to hire people to mitigate it. More popular, more mature projects will have a bigger talent pool to hire from, so your training costs will be lower, and those training costs are part of your operational cost too.

Rationing innovation tokens therefore can work as a reasonable heuristic, or proxy metric, for avoiding a mess of complex operational problems associated with dependencies that are expensive to operate and hard to hire for.

There are some minor issues I want to point out before getting to the overarching one.

- “has a lot of operational overhead” is a stereotype of a new technology, not an inherent property. If you want to reject a technology on the basis of being too high-overhead, at least look into its actual overhead a little bit. Sometimes, especially in 2024 as opposed to 2015, the point of a new, shiny piece of tech is to address operational issues that the more boring, older one had.

- “hard to learn” is also a stereotype; if “newer” meant “harder” then we would all be using troff rather than Google Docs. Actually ask if the innovativeness is making things harder or easier; don’t assume.

- You are going to have to train people on your stack no matter what. If a technology is adding a lot of value, it’s absolutely worth hiring for general ability and making a plan to teach people about it. You are going to have to do this with the core technology of your product anyway.

As I said, though, these are minor issues. The big problem with modeling operational overhead as an “innovation token” is that an even bigger concern than selecting an innovative tool is selecting too many tools.

The impulse to select more tools and make your operational environment more complex can be made worse by trying to avoid innovative tools. The important thing is not “less innovation”, but more consistency. To illustrate this, let’s do a simple thought experiment.

Let’s say you’re going to make a web app. There’s a tool in Haskell that you really like for a critical part of your app’s problem domain. You don’t want to spend more than one innovation token though, and everything in Haskell is inherently innovative, so you write a little service that just does that one part and you write the rest of your app in Ruby, calling into that service whenever you need to use that thing. This will appropriately restrict your “innovation token” expenditure.

Does doing this actually reduce your operational overhead, though?

First, you will have to find a team that likes both Ruby and Haskell and sees no problem using both. If you are not familiar with the cultural proclivities of these languages, suffice it to say that this is unlikely. Hiring for Haskell programmers is hard because there are fewer of them than Ruby programmers, but hiring for polyglot Haskell/Ruby programmers who are happy to do either is going to be really hard.

Since you will need to find different people to write in the different languages, even in the best case scenario, you will have two teams: the Haskell team and the Ruby team. Even if you are incredibly disciplined about inter-service responsibilities, there will be some areas where duplication of code is necessary across those services. Disagreements will arise and every one of these disagreements will be a source of social friction and software defects.

Then, you need to set up separate CI pipelines for each language, separate deployment systems, and of course, separate databases. Right away you are effectively doubling your workload.

In the worse, and unfortunately more likely scenario, there will be enormous infighting between these two teams. Operational incidents will be more difficult to manage because rather than learning the Haskell tools for operational visibility and disseminating that institutional knowledge amongst your team, you will be half-learning the lessons from two separate ecosystems and attempting to integrate them. Every on-call engineer will be frantically trying to learn a language ecosystem they don’t use regularly, or you will double the size of your on-call rotation. The Ruby team may start to resent the Haskell team for getting to exclusively work on the fun parts of the problem while they are doing things that look more like rote grunt work.